Department of Physical Sciences, Meru University of Science and Technology (MUST)

Contents

- 1 About Meru University of Science and Technology

- 2 Meru University of Science and Technology Raspberry Pi Project

- 3 Executive Summary

- 4 Team Members

- 5 Methodology of Implementation and Assessment

- 6 Meru University RPI team first training

- 7 Launch of RPI to Computing students

- 8 RPI related projects in progress

- 9 Raspberry pi Tutor project

About Meru University of Science and Technology

Meru University of Science and Technology (MUST) is a young public university in Meru County, Republic of Kenya. It was chartered in March 2013 under the Universities Act of 2012. Before attaining university status, MUST was a constituent college of Jomo Kenyatta University of Agriculture and Technology (JKUAT).

Meru University of Science and Technology Raspberry Pi Project

Meru University of Science and Technology has won a KENET Raspberry Pi mini-grant. The project uses a credit-card-sized computer called the Raspberry Pi (RPI) to teach computational units at the university. The Raspberry Pi is a small computer that was developed for teaching computer science in schools. Besides being portable, the RPI is affordable and can easily be interfaced with external hardware and used for automation projects.

As a pilot project, we will use the RPI to teach three computational units offered at the university during the April to August 2015 semester. We will also conduct RPI-related projects with the aim of publishing the results in refereed journals. Students will be encouraged to develop their own projects using the Pi and eventually showcase them within the university and at other forums organized by RPI stakeholders.

Executive Summary

The overall objective is to establish a Raspberry Pi teaching laboratory at Meru University of Science and Technology (MUST). To achieve this, a minimum of three computational units will be used to pilot the use of the Raspberry Pi in teaching. Instructional manuals for teaching these units with the Raspberry Pi will be developed and provided to the students, and student learning outcomes will be assessed throughout the semester. Novel findings from the pilot stage will be presented at a refereed conference. Before receiving the grant, the university had already acquired a number of Raspberry Pi units and accessories, developed exhibits showing how the Raspberry Pi can be used to teach computer-related courses in schools, and showcased these projects at Commission for University Education exhibitions and ASK shows. Work is also in progress on a security surveillance system and on an electronic device for early detection of crop diseases and other plant stress, both based on the Raspberry Pi. Upon successful completion of the pilot stage, the university will scale up the use of the Raspberry Pi to more courses and launch a campaign to popularize its adoption for teaching computer-related courses in tertiary institutions and in secondary and primary schools around the university.

Team Members

- Mr. Daniel Maitethia Memeu - Department of Physical Sciences - Lead Researcher

- Mr. Ronoh Wycliffe – Department of Information Technology & Computer Science - Team member

- Mr. Abkul Orto - Department of Information Technology & Computer Science - Team member

- Mr. Kinuthia Mugi – Department of Engineering - Team member

Methodology of Implementation and Assessment

The university currently has four computer teaching laboratories, all connected to the internet. One of these labs will be used to establish a Raspberry Pi teaching laboratory. Facilities such as monitors, USB keyboards and mice, internet connectivity, and computer desks will be shared between the existing PCs and the Raspberry Pi modules to be acquired. A suitable way of mounting the Raspberry Pi modules inside the existing system units will be adopted.

Meru University RPI team first training

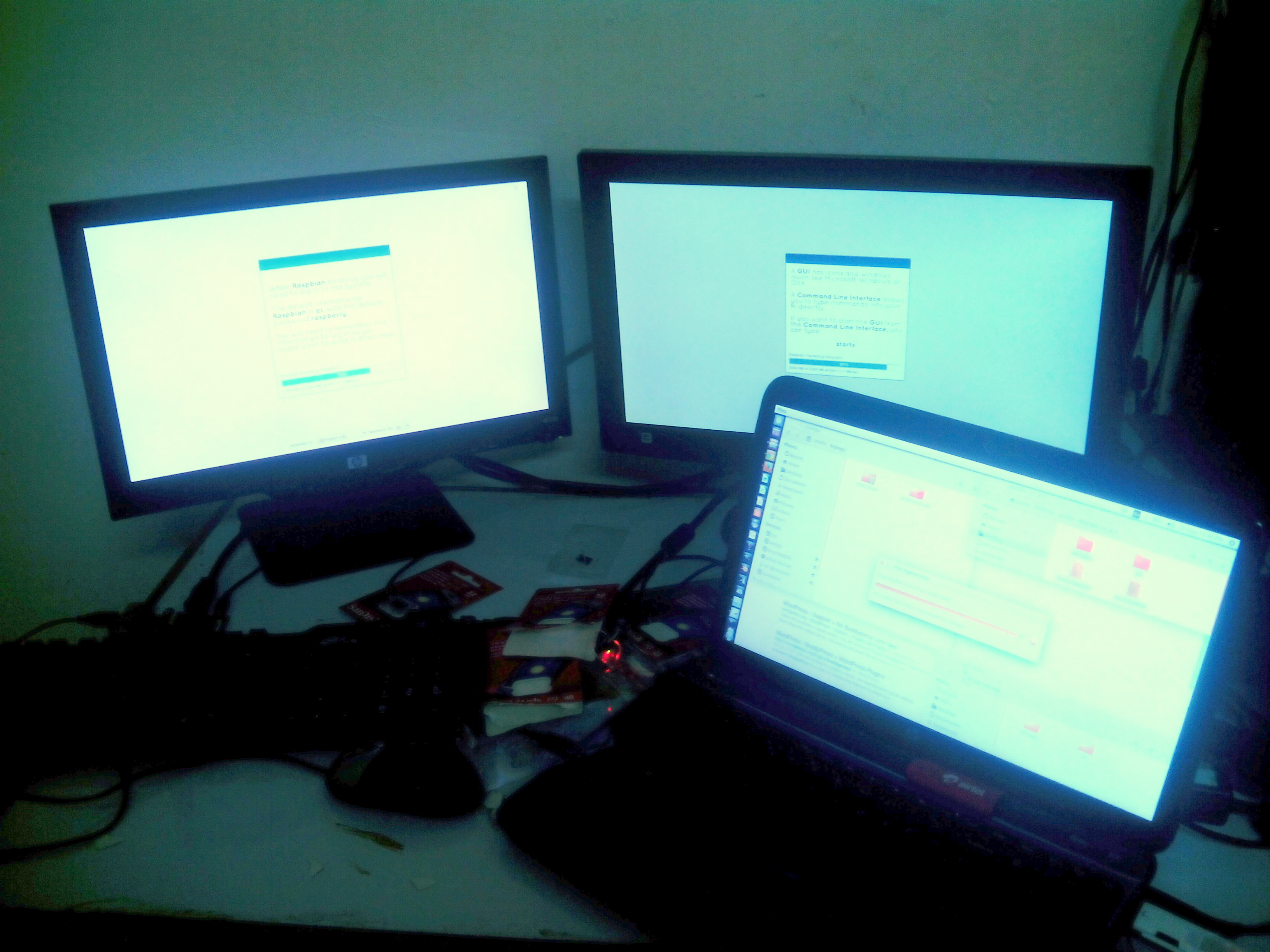

On 2 March 2015, the RPI project team underwent its first training on getting started with the RPI. The course covered the key components of the Pi, downloading Raspbian (the official Raspberry Pi operating system) and loading it onto the SD card, powering up the Pi, configuring the OS, and launching basic applications for word processing, spreadsheets, presentations, and internet browsing. Members were also introduced to Python programming using the Python IDE that comes with Raspbian.

Launch of RPI to Computing students

On 15 March 2015, the Meru University RPI team introduced the RPI to computer science students. The team explained the capabilities of the device to the students, with demonstrations. At this meeting, the students resolved to organize themselves into groups to learn about and develop more RPI applications.

RPI imaging module for crop stress monitoring

We are developing a system for detecting crop stress in greenhouses using a Raspberry Pi imaging module. The module consists of an RPI connected to the RPI camera. An executable Linux script that periodically takes photos has been written and added to the Linux task scheduler (cron), so that after every reboot the Pi starts taking photos at a specified interval. The system is solar powered. The imaging module is complete and has been tested in the greenhouse, where it works well. We are now working on suitable algorithms for detecting stress in the captured images, which we intend to implement in Python with the OpenCV image processing library.
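The periodic capture step can be sketched in Python as below. This is an illustration, not the team's actual script: the function names are hypothetical, and the camera is passed in as a function so the scheduling logic can be tried without a Pi. On Raspbian of that era, `pi_camera` assumes the `raspistill` tool (whose `-o` option sets the output file); a crontab line such as `@reboot python /home/pi/capture.py` (hypothetical path) would start the script after every reboot.

```python
import subprocess
import time
from datetime import datetime


def timestamped_name(when, prefix="crop"):
    """Build a sortable file name such as crop-20150601-120000.jpg."""
    return "{}-{}.jpg".format(prefix, when.strftime("%Y%m%d-%H%M%S"))


def capture_series(capture, shots, interval_s, clock=datetime.now, sleep=time.sleep):
    """Call capture(path) `shots` times, waiting interval_s seconds between shots."""
    saved = []
    for i in range(shots):
        path = timestamped_name(clock())
        capture(path)              # whatever function actually drives the camera
        saved.append(path)
        if i < shots - 1:          # no need to wait after the last photo
            sleep(interval_s)
    return saved


def pi_camera(path):
    """Hypothetical capture function for the Pi camera using raspistill."""
    subprocess.call(["raspistill", "-o", path])
```

On the Pi, `capture_series(pi_camera, shots=48, interval_s=30 * 60)` would take one photo every 30 minutes for a day. Injecting `clock` and `sleep` keeps the timing testable.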

Raspberry pi Tutor project

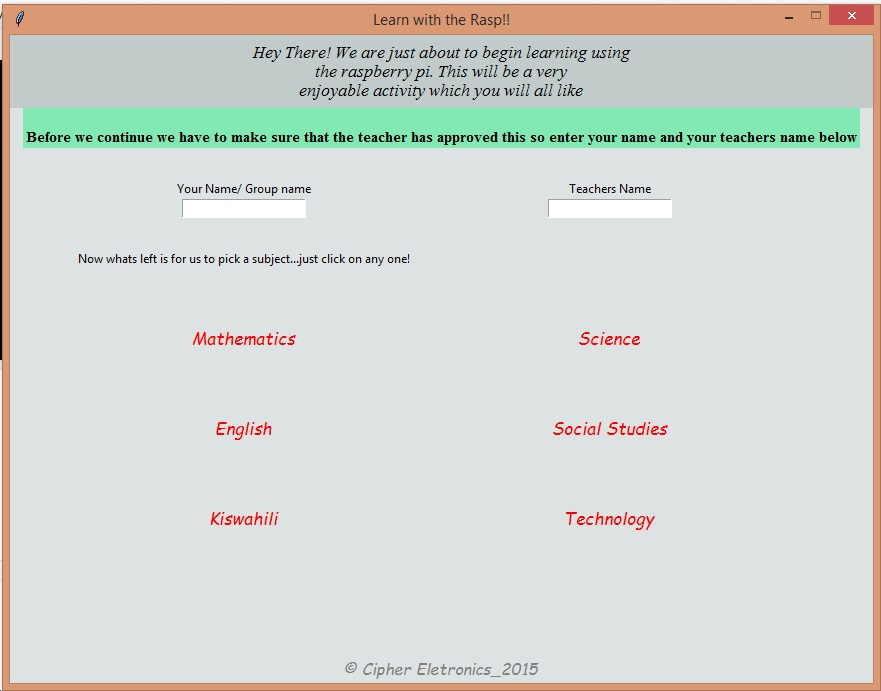

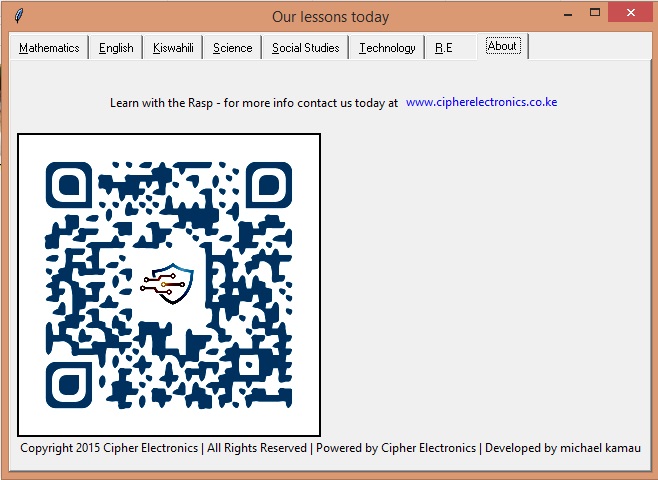

The Meru University Raspberry Pi team is working on making the Raspberry Pi an ideal device for learning. Specifically, the team is developing educational applications that run on the Pi and aim to make learning interesting right from early childhood. So far, applications covering elementary English, mathematics, and technology lessons have been developed, using images, videos, and sound as learning aids.

The project has been showcased at various forums, including the Commission for University Education exhibition held in Machakos from 19 to 21 March 2015, the NACOSTI exhibition week held at the University of Nairobi from 11 to 16 May 2015, and the Meru National ASK Show held at the Meru ASK showground from 3 to 6 June 2015. Members of the public were intrigued by the capabilities of the little device and agreed that the Pi can be a great learning companion for pupils and students as well as teachers.

The team's main goal for this project is to advocate the adoption of the Raspberry Pi (RPI) as an alternative to desktop, laptop, and tablet computers for learning IT-related courses.

The presentation was categorized as an exhibition. Apart from showcasing our amazing gadget, the team was able to interact with visitors, learn where the project can be improved, and identify the challenges it is likely to encounter in the near future.

PROJECT DESCRIPTION (By developers: students from Meru University)

Target Audience: Class 1-4 students, Teachers

Application: Teaching Aid, Student management

Detailed Description:

“CHEESE” is an innovative program aimed at teaching young students different subjects in an immersive and highly interactive manner.

The graphical user interface allows different students to log in before starting a lesson.

The student types in their name and the teacher's name, after which a log entry is kept.

The teacher can then use the log to track which students were present for the class and to access each student's performance.

The program also supports group work by letting each group member log in. This data is very useful to the teacher for progress assessment and grading.

After logging in, the student can choose from a range of subjects. Our personal favorite is Technology, a subsidiary of Science. In this subject, the program presents different logos and graphics, and the student must recognize them and type in the correct answer. Points are awarded for correct answers.

But that's not all.

Teachers can also upload their own images that they consider appropriate and define the correct answers, further extending the capability of the program.

This is because, during development, we had in mind not only the students but also the teachers who supervise them.
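The login log, teacher-defined questions and point scoring described above could be modelled roughly as follows. This is a hypothetical sketch: the class, its methods and the sample data are invented for illustration and are not taken from the CHEESE source code.

```python
from datetime import date


class Lesson(object):
    """Hypothetical model of one lesson: a login log plus a question bank."""

    def __init__(self, subject):
        self.subject = subject
        self.log = []          # one entry per login: (day, student, teacher)
        self.questions = {}    # image file -> expected answer (lower case)
        self.scores = {}       # student -> points

    def login(self, student, teacher, day=None):
        """Record a login; each group member logs in separately."""
        self.log.append((day or date.today(), student, teacher))
        self.scores.setdefault(student, 0)

    def add_question(self, image, answer):
        """Teachers add their own images together with the correct answer."""
        self.questions[image] = answer.strip().lower()

    def answer(self, student, image, reply):
        """Award one point for a correct (case-insensitive) answer."""
        correct = reply.strip().lower() == self.questions[image]
        if correct:
            self.scores[student] += 1
        return correct

    def attendance(self, teacher, day):
        """Students who logged in with this teacher on the given day."""
        return sorted({s for d, s, t in self.log if d == day and t == teacher})
```

Comparing lower-cased, stripped strings is one simple way to avoid penalizing young pupils for capital letters or stray spaces.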

Students can select from the following subjects (three are still under development):

| SUBJECT | DESCRIPTION |
|---|---|
| Mathematics | Simple arithmetic questions; the student works out the answer to each one. |
| English | Aimed at young children: the student identifies pictures and spells their names correctly. |
| Kiswahili | Built around graphics: students translate and spell the names of different pictures. |
| Christian Education | Morals are an important lesson for us and for society, so as part of the project we designed simple stories, some borrowed from famous tales, to teach children different morals and life skills. |
| Social Studies | Under development |
| Music | Under development |
| Art and Craft | Under development |
| Technology | The program presents different logos and graphics, and the student must recognize them and type in the correct answer. |

NB: In all of the above subjects, points are awarded for each correct answer.

The application was developed by Mr. Michael Kamau and Mr. Steve Kisinga, both students at Meru University. We believe it has huge potential as a teaching aid as well as a user-friendly student management program.

We also presented the project at the Meru ASK Show, where we scooped various awards, among them the best innovation stand. Major efforts are being made to ensure that the project succeeds, and the team members are showing great dedication.

RASPBERRY PI AS A TEACHING AID

CIT 3102 – FUNDAMENTALS OF COMPUTER PROGRAMMING

The raspberry pi has been used in Meru University as a teaching aid to teach among other units, Fundamentals of computer programming.

Students were introduced to the raspberry pi, what it is, it's capabilities and its advantages. As part of the lesson students were required to use the raspberry pi to access the internet and use various search engines to find out the technical specifications of the device.

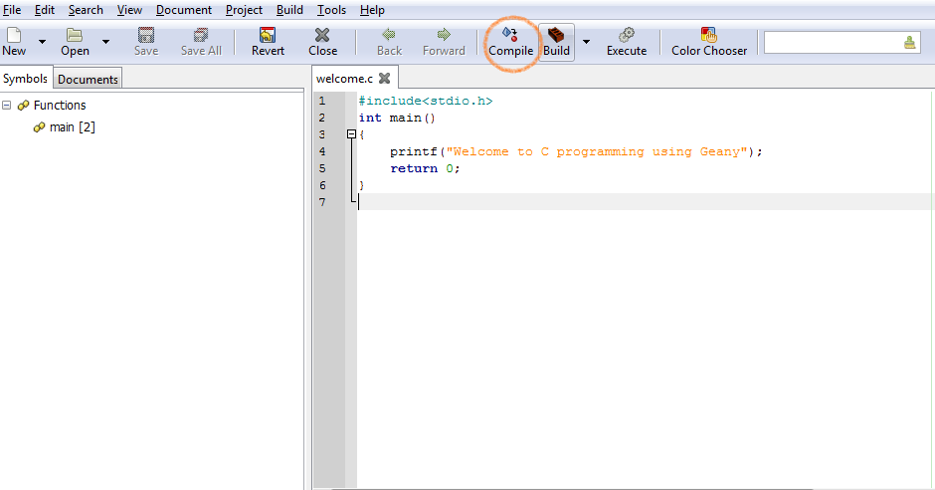

Students were intoduced to programming basic programming and taught basic proramming skills. Geany was used as the IDE of choice to teach C programming. Ranging from displaying simple text on the terminal to the more complex programming skills of assigning variables and accepting input, the raspberry pi has offered a great deal in helping teach the unit.


Introduction to Raspberry Pi and GPIO programming

Professor Simon Cox and his team at the University of Southampton connected 64 Raspberry Pi boards to build an experimental supercomputer, held together with Lego bricks.

The project was able to cut the cost of a supercomputer from millions of dollars to thousands or even hundreds.

Introduction

System on a Chip

What does System on a Chip (SoC) mean?

A system on a chip (SoC) combines the electronic circuits of various computer components onto a single integrated circuit (IC).

A SoC is a complete electronic system on a single substrate that may contain analog, digital, mixed-signal, or radio-frequency functions.

Its components usually include a graphics processing unit (GPU), a central processing unit (CPU) that may be multi-core, and system memory (RAM).

Because a SoC integrates these components on a single chip, it typically uses less power, has better performance, takes up less space, and is more reliable than an equivalent multi-chip system. Most SoCs today are found in mobile devices such as smartphones and tablets.

The distinction between a microprocessor and a microcontroller should also be explained.

A microprocessor is an IC built around the central processing unit (CPU) that relies on external memory and peripherals. An example is the Intel Core i7.

A microcontroller has a CPU, memory, and other peripherals embedded on one chip, and can be programmed to perform specific functions.

A very popular microcontroller board is the Arduino Uno, which is built around the ATmega328P microcontroller.

The difference between a SoC and a microcontroller is often defined by the amount of random access memory (RAM): a SoC has enough to run its own operating system.


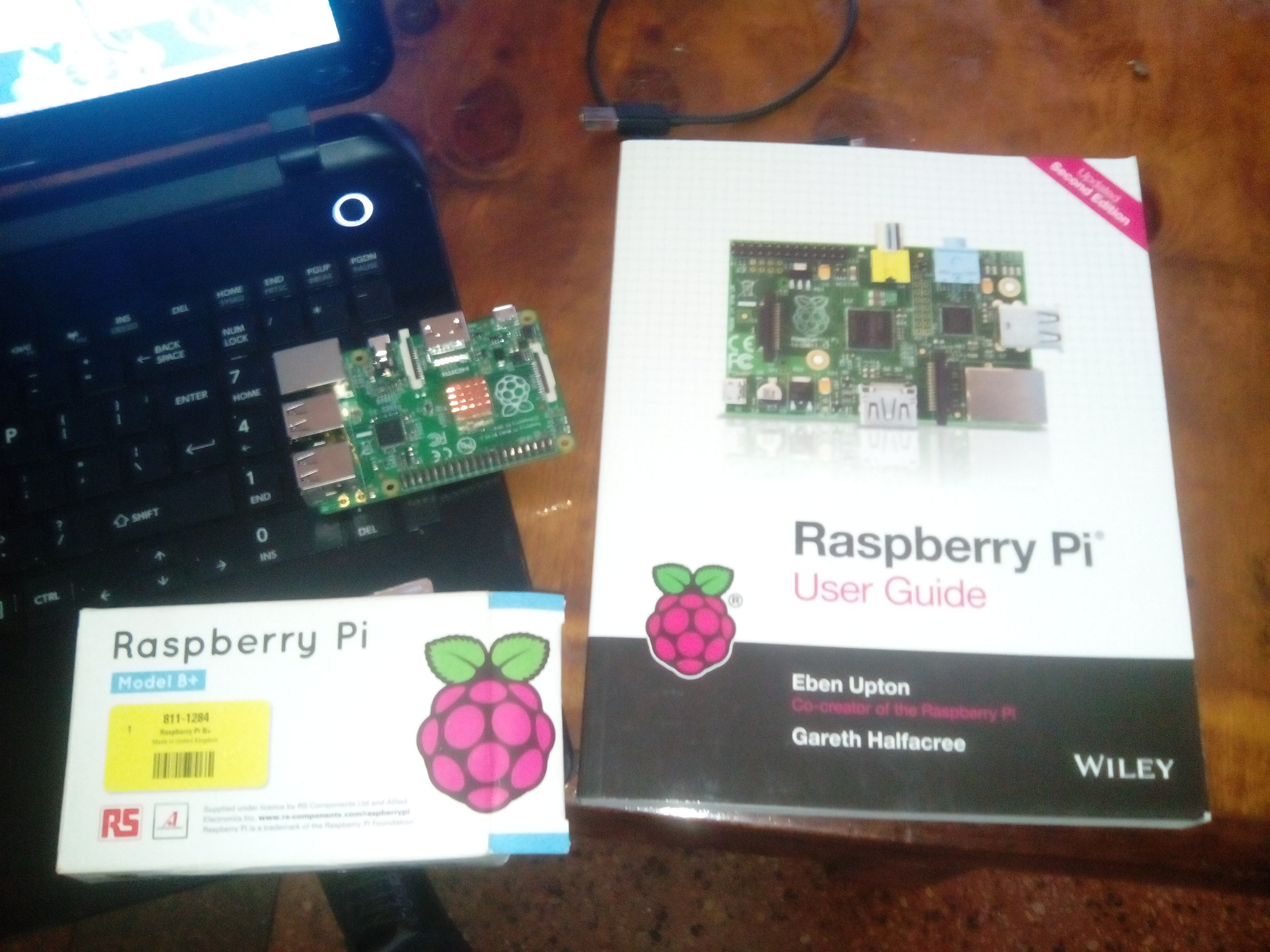

The board we will use today is the Raspberry Pi Model B+.

At the heart of this credit-card-sized computer is the Broadcom BCM2835 SoC, which contains an ARM CPU and a VideoCore IV graphics processing unit.

The B+ has a 700 MHz CPU and 512 MB of RAM.

Pi Assembly and Raspbian Install

We will now discuss how to assemble the components that make up the Raspberry Pi.

You will need the following (should be on the bench):

•Raspberry Pi B+ board

•HDMI to DVI cable

•Monitor or TV

•Micro SD card

•USB keyboard and mouse

•Micro USB power supply


We will now bake (assemble) the Pi!

Once you have it put together, power it on.

You should see the New Out Of Box Software (NOOBS) operating system installer.

Select the Raspbian [RECOMMENDED] option, change the language to English (US) and the keyboard to US, and click Install.

While the OS is installing we will discuss some electronics information.

Voltage, Current, and Resistance

When a potential difference exists between two charged bodies that are connected by a conductor, electrons flow through the conductor from the negatively charged body to the positively charged body.

The voltage itself does not flow; only the charge does. Voltage supplies the “push” or “pressure”.

Plumbing Analogy

It is difficult to keep the concepts of voltage, current, charge, resistance, etc. straight, but there is a helpful analogy between electrical circuits and plumbing systems. Wires are, of course, like pipes; current is like the rate of water flow (gallons per minute); and resistance is like friction in the pipes. Here is the most helpful part of the analogy: voltage is like water pressure, and batteries and generators are like pumps. Like all analogies, however, it is not perfect.


Voltage can also be thought of as water pressure. We are mainly concerned with the potential difference between two points.

Ground is the reference point and is considered to have zero potential.

Electric current is the directed flow of free electrons.

Electrons move from a region of negative potential to a region of positive potential, so electron flow is from negative to positive.

By convention, however, circuit diagrams show current (conventional current) flowing from positive to negative, and this is the direction indicated by the arrow in the LED (diode) symbol.
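Ohm's law (V = I × R) ties voltage, current and resistance together, and it tells us what resistor to put in series with an LED: the resistor must drop the supply voltage minus the LED's forward voltage at the desired current. A minimal sketch, assuming the Pi's 3.3 V GPIO output, a red LED with a forward voltage of about 2.0 V, and a target current of 10 mA (the LED figures are illustrative assumptions; check your LED's datasheet):

```python
# Standard E12 resistor values for one decade (10 ohms to 82 ohms)
E12 = (10, 12, 15, 18, 22, 27, 33, 39, 47, 56, 68, 82)


def series_resistor(supply_v, led_forward_v, current_a):
    """Ohm's law for the resistor: R = (V_supply - V_led) / I."""
    if supply_v <= led_forward_v:
        raise ValueError("supply voltage must exceed the LED forward voltage")
    return (supply_v - led_forward_v) / current_a


def next_e12(ohms):
    """Smallest E12 value >= ohms; rounding up keeps the current at or below target."""
    multiplier = 1
    while True:
        for base in E12:
            if base * multiplier >= ohms:
                return base * multiplier
        multiplier *= 10


r = series_resistor(3.3, 2.0, 0.010)          # about 130 ohms
chosen = next_e12(r)                           # 150 ohms
actual_ma = (3.3 - 2.0) / chosen * 1000        # about 8.7 mA through the LED
```

Rounding up to a standard value is the safe choice: a slightly larger resistor only makes the LED a little dimmer, while a smaller one pushes more current through the GPIO pin.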


Breadboarding Basics

[Slides: What is a breadboard? What circuit are we going to breadboard today? Are these wires connected? Which is correct?]

The longer lead of the LED (the anode, +) goes on top.

Connect Circuit to Pi

Now that you have a working resistor and LED circuit, we can now connect it to the Raspberry Pi.

Located on the Pi is a 40-pin general purpose input/output (GPIO) header.

Most of the 40 pins can be configured as inputs or outputs; the others supply power (3.3 V or 5 V) or ground. In our experiment, we will connect our LED circuit to two of the outputs.

To connect the GPIO header to the breadboard, we will use a 40-pin ribbon cable.

The red stripe on the cable indicates pin 1, which is in the upper left corner of the GPIO header.

We will connect the ribbon cable as follows:

• Pin 6 will be used for ground (common to both LEDs)

• Pin 11 will be used for our output to the blue LED

• Pin 12 will be used for our output to the red LED

Connect a black or brown wire to pin 6, and red or orange wires to pins 11 and 12 on the ribbon cable.

Plug the black wire into the negative side of the circuit and the red wires into the positive side.
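A quick check of a wiring plan can catch the most common mistake: putting an LED wire on a power or ground pin. This sketch assumes the standard 40-pin header of the B+ in physical (BOARD) numbering, with ground on pins 6, 9, 14, 20, 25, 30, 34 and 39, 3.3 V on pins 1 and 17, and 5 V on pins 2 and 4. The helper `check_wiring` is hypothetical. It also records that physical pins 11 and 12 are GPIO17 and GPIO18 in Broadcom (BCM) numbering, which matters if `GPIO.setmode(GPIO.BCM)` is used instead of `GPIO.BOARD`.

```python
# Physical (BOARD) numbering of the 40-pin header; only the pins needed here
GROUND_PINS = {6, 9, 14, 20, 25, 30, 34, 39}
POWER_PINS = {1: "3.3 V", 17: "3.3 V", 2: "5 V", 4: "5 V"}
BOARD_TO_BCM = {11: 17, 12: 18}   # physical pin -> Broadcom GPIO number

# The wiring used in this exercise
WIRING = {"ground": 6, "blue_led": 11, "red_led": 12}


def check_wiring(wiring):
    """Return a list of problems with a {name: physical_pin} wiring plan."""
    problems = []
    if wiring["ground"] not in GROUND_PINS:
        problems.append("ground wire is on pin {}, which is not a ground pin"
                        .format(wiring["ground"]))
    for name, pin in sorted(wiring.items()):
        if name == "ground":
            continue
        if pin in GROUND_PINS or pin in POWER_PINS:
            problems.append("{} is on pin {}, which is not a GPIO pin".format(name, pin))
    return problems
```

`check_wiring(WIRING)` returns an empty list for the plan above.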

Your project should look like this:

Program the Pi

Now that the Pi is set up and the electronics are connected, we will begin programming the GPIO outputs using a programming language called Python. Python is an interpreted, object-oriented, high-level programming language. Its simple, easy-to-learn syntax emphasizes readability, which makes programs easy to maintain and update. Python is included in the Raspbian distribution. We will run a simple Python program to verify that our Pi is ready to go. Log on to the Pi with the username pi and the password raspberry.

You should now see the shell prompt like:

Type sudo python and you should see the following:

Type quit() to exit from the Python interpreter.

This shows us that Python is installed and ready for action.


We will now write our first Python program.

Type sudo nano helloworld.py and press Enter.

Nano is a text editor that can be used to type our code.

Type the following line:

```python
print("Hello World!")
```

Once you have written the code, press Ctrl and X at the same time, press Y to save the file, and then press Enter to confirm the file name.

Now type sudo python helloworld.py and you should see:

We will now write some code to control the LED circuit.

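Type sudo nano led.py and press Enter.

```python
import RPi.GPIO as GPIO      # library for controlling the GPIO pins
import time

GPIO.setmode(GPIO.BOARD)     # refer to pins by their physical pin numbers
GPIO.setup(11, GPIO.OUT)     # pin 11 (blue LED) is an output
GPIO.output(11, GPIO.HIGH)   # turn the LED on
time.sleep(1)                # wait one second
GPIO.output(11, GPIO.LOW)    # turn the LED off
GPIO.cleanup()               # release the pins
```

Note: these are capital O's, not 0's (zeroes).

Add code to light up pin 12.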


Once you have written the code, press Ctrl and X at the same time, then press Y to save the file and Enter to confirm the file name.

Now type sudo python led.py. You should see your LEDs light up for 1 second and turn off.

Modify your code to make the LEDs blink 3 times.


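```python
import RPi.GPIO as GPIO
import time

GPIO.setmode(GPIO.BOARD)
GPIO.setup(11, GPIO.OUT)

for i in range(3):               # repeat three times
    GPIO.output(11, GPIO.HIGH)   # LED on
    time.sleep(1)
    GPIO.output(11, GPIO.LOW)    # LED off
    time.sleep(1)

GPIO.cleanup()
```

Indent the lines inside the loop consistently, using either a tab or spaces (four spaces is the usual Python convention), but never a mix of both.

Add code for pin 12!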

Putting it all Together

You now have the skills to build a complete light show

system!

Enjoy!
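One way to combine these pieces into a light show is sketched below. It assumes the wiring above (blue LED on pin 11, red LED on pin 12) and uses only the RPi.GPIO calls already shown in this tutorial. The function name `light_show` and its parameters are hypothetical. Because the GPIO module is passed in as an argument, the sequencing logic can be tried on a machine without a Pi. The `try`/`finally` ensures `GPIO.cleanup()` runs even if the show is stopped with Ctrl+C.

```python
import time

try:
    import RPi.GPIO as GPIO   # available on Raspbian
except ImportError:
    GPIO = None               # not running on a Pi

BLUE_PIN = 11   # physical pin 11
RED_PIN = 12    # physical pin 12


def light_show(gpio, pins=(BLUE_PIN, RED_PIN), rounds=3, delay=0.5, sleep=time.sleep):
    """Light each LED in turn, `rounds` times, then release the pins."""
    gpio.setmode(gpio.BOARD)
    for pin in pins:
        gpio.setup(pin, gpio.OUT)
    try:
        for _ in range(rounds):
            for pin in pins:
                gpio.output(pin, gpio.HIGH)   # LED on
                sleep(delay)
                gpio.output(pin, gpio.LOW)    # LED off
    finally:
        gpio.cleanup()                        # always release the pins


if __name__ == "__main__" and GPIO is not None:
    light_show(GPIO)
```

Changing `rounds`, `delay`, or the order of `pins` is enough to create different patterns.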

Introduction to Python

A readable, dynamic, pleasant, flexible, fast and powerful language

Nowell Strite

Manager of Tech Solutions @ PBS, nowell@strite.org

Overview

•Background

•Syntax

•Types / Operators / Control Flow

•Functions

•Classes

•Tools

What is Python

•Multi-purpose (Web, GUI, Scripting, etc.)

•Object Oriented

•Interpreted

•Strongly typed and Dynamically typed

•Focus on readability and productivity

Features

•Batteries Included

•Everything is an Object

•Interactive Shell

•Strong Introspection

•Cross Platform

•CPython, Jython, IronPython, PyPy

Who Uses Python

•Google

•PBS

•NASA

•Library of Congress

•The Onion

•...the list goes on...

Releases

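•Created in 1989 by Guido van Rossum

•Python 1.0 released in 1994

•Python 2.0 released in 2000

•Python 3.0 released in 2008

•When these slides were written, Python 2.7 was the recommended version and 3.0 adoption was expected to take a few years; Python 2 has since reached end of life (January 2020), so new code should target Python 3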

Syntax

Hello World

hello_world.py

Indentation

•Most languages don’t care about indentation

•Most humans do

•We tend to group similar things together

Indentation: the else here actually belongs to the 2nd if statement


Indentation: I knew a coder like this

Indentation: you should always be explicit

Indentation

Python embraces indentation
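In Python, indentation is what decides which `if` an `else` belongs to. The two functions below differ only in how far the `else` is indented (an illustration written for this page, not the original slide code):

```python
def describe_inner(x):
    """The else is indented under the inner if, so it pairs with `if x > 100`."""
    if x > 0:
        if x > 100:
            return "large positive"
        else:
            return "small positive"
    return "not positive"


def describe_outer(x):
    """The else is dedented to the outer if, so it pairs with `if x > 0`."""
    if x > 0:
        if x > 100:
            return "large positive"
    else:
        return "not positive"
    return "small positive"
```

Both functions give the same answers, but each `else` is attached to a different `if`, and the indentation alone makes that explicit.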

Comments

Types

Strings

Numbers

Null

Lists


Dictionaries

Dictionary Methods

Booleans
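A compact illustration of the types listed above, written for this page rather than taken from the original slides (it runs on Python 3 and, apart from minor details, on Python 2.7):

```python
# Strings
greeting = "Hello, " + "Pi"        # concatenation -> "Hello, Pi"

# Numbers
count = 42                         # int
ratio = 0.5                        # float

# Null: Python's "no value" is None
nothing = None

# Lists: ordered and mutable
fruits = ["apple", "banana"]
fruits.append("cherry")

# Dictionaries: key -> value mappings
ages = {"alice": 30, "bob": 25}
ages["carol"] = 35

# Dictionary methods
names = sorted(ages.keys())        # ["alice", "bob", "carol"]
total_age = sum(ages.values())     # 90
dave_age = ages.get("dave")        # get() returns None instead of raising KeyError

# Booleans
is_empty = len(fruits) == 0        # False
```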

Operators

Arithmetic

String Manipulation

Logical Comparison

Identity Comparison

Arithmetic

Comparison
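The operator groups above, illustrated briefly (a sketch written for this page; note that `/` behaves differently in Python 2 and Python 3):

```python
# Arithmetic
quotient = 7 / 2        # 3.5 in Python 3 (Python 2 gives 3 for two ints)
floored = 7 // 2        # 3: floor division
remainder = 7 % 2       # 1
power = 2 ** 10         # 1024

# String manipulation
name = "raspberry"
shout = name.upper() + "!"        # "RASPBERRY!"
label = "{} pi".format(name)      # "raspberry pi"
first_three = name[:3]            # "ras"
repeated = "ab" * 3               # "ababab"

# Logical comparison
in_range = 0 < 5 < 10 and not False   # chained comparison -> True

# Identity comparison: == compares values, `is` compares object identity
a = [1, 2]
b = [1, 2]
same_value = a == b     # True
same_object = a is b    # False: two separate list objects
```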

Control Flow

Conditionals

For Loop

Expanded For Loop

While Loop

List Comprehensions

•Useful for replacing simple for-loops.
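The control-flow constructs above, including a list comprehension that replaces a simple loop, in one short sketch written for this page:

```python
def classify(n):
    """Conditionals: if / elif / else."""
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    else:
        return "positive"


# For loop over a list
labels = []
for n in [-2, 0, 3]:
    labels.append(classify(n))

# Expanded for loop: enumerate() yields the index as well as the item
indexed = []
for i, label in enumerate(labels):
    indexed.append((i, label))

# While loop: repeat until the condition becomes false
countdown = []
n = 3
while n > 0:
    countdown.append(n)
    n -= 1

# List comprehensions: one-line replacements for simple for-loops
squares = [x * x for x in range(5)]             # [0, 1, 4, 9, 16]
evens = [x for x in range(10) if x % 2 == 0]    # [0, 2, 4, 6, 8]
```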

Functions

Basic Function

Function Arguments

Arbitrary Arguments

Fibonacci

Fibonacci Generator
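A sketch of the function topics above: a default argument, arbitrary arguments, and the Fibonacci sequence written both as a list-building function and as a generator (illustrative code written for this page):

```python
def greet(name, greeting="Hello"):
    """Basic function with a default argument."""
    return "{}, {}!".format(greeting, name)


def summarize(*args, **kwargs):
    """Arbitrary arguments: positional ones arrive as a tuple, keyword ones as a dict."""
    return len(args), sorted(kwargs)


def fib(n):
    """Return the first n Fibonacci numbers as a list."""
    result = []
    a, b = 0, 1
    for _ in range(n):
        result.append(a)
        a, b = b, a + b
    return result


def fib_gen():
    """Fibonacci generator: yields the numbers lazily, without end."""
    a, b = 0, 1
    while True:
        yield a
        a, b = b, a + b
```

The generator never builds a list, so it can produce as many numbers as the caller asks for, for example with `next()` in a loop or with `itertools.islice`.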

Classes

Class Declaration

Class Attributes

•Attributes assigned at class declaration should always be immutable

Class Methods

Class Instantiation & Attribute Access

Class Inheritance
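The class topics above (declaration, class attributes, methods, instantiation and inheritance) in one small example written for this page. Inheriting from `object` keeps it working on both Python 2 and Python 3.

```python
class Animal(object):
    """Class declaration."""
    kingdom = "Animalia"        # class attribute: immutable, shared by all instances

    def __init__(self, name):
        self.name = name        # instance attribute, set per object

    def speak(self):
        return "..."

    def describe(self):
        return "{} says {}".format(self.name, self.speak())


class Dog(Animal):
    """Class inheritance: Dog reuses Animal and overrides speak()."""

    def speak(self):
        return "Woof"


rex = Dog("Rex")                # instantiation
```

`rex.describe()` returns "Rex says Woof" because the inherited `describe()` calls the overridden `speak()`. The "immutable class attributes" rule exists because a mutable class attribute, such as a list, would be shared and modified by every instance.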

Python’s Way

•No interfaces

•No real private attributes/functions

•Private attributes start (but do not end) with double underscores.

•Special class methods start and end with double underscores.

•`__init__`, `__doc__`, `__cmp__` (Python 2 only), `__str__`

Imports

•Allows code isolation and re-use

•Adds references to variables/classes/functions/etc. into current namespace

Imports

More Imports

Error Handling

Documentation

Docstrings
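Imports, error handling and docstrings, illustrated briefly (example code written for this page):

```python
import math                      # import a module; use its names as math.sqrt
from os import path as ospath    # import one name under an alias


def safe_sqrt(x):
    """Return the square root of x, or None if x is negative."""
    try:
        return math.sqrt(x)
    except ValueError:           # math.sqrt raises ValueError for negative input
        return None


def parse_ratio(a, b):
    """Return a / b as a float, or a short message describing what went wrong."""
    try:
        result = float(a) / float(b)
    except ValueError:           # a or b is not a number
        return "not a number"
    except ZeroDivisionError:
        return "division by zero"
    else:                        # runs only if no exception was raised
        return result
```

Each docstring is available at runtime, for example as `safe_sqrt.__doc__` or through `help(safe_sqrt)`.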

Tools

Web Frameworks

•Django

•Flask

•Pylons

•TurboGears

•Zope

•Grok

IDEs

•Emacs

•Vim

•Komodo

•PyCharm

•Eclipse (PyDev)

Package Management

Resources

•http://python.org/

•http://diveintopython.org/

•http://djangoproject.com/

Example

Going Further

•Decorators

•Context Managers

•Lambda functions

•Generators
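A first taste of the "going further" topics: a decorator, a context manager, a lambda, and a generator expression. This is a sketch written for this page; `count_calls` and `timed` are illustrative names, not library functions.

```python
import time
from contextlib import contextmanager
from functools import wraps


def count_calls(func):
    """Decorator: wrap func and count how many times it is called."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return func(*args, **kwargs)
    wrapper.calls = 0
    return wrapper


@count_calls
def square(x):
    return x * x


@contextmanager
def timed(results):
    """Context manager: append the with-block's elapsed seconds to results."""
    start = time.time()
    try:
        yield
    finally:
        results.append(time.time() - start)


# Lambda function: a small anonymous function, here used as a sort key
by_length = sorted(["raspberry", "pi", "gpio"], key=lambda word: len(word))

# Generator expression: values are produced lazily, one at a time
evens = (n for n in range(10) if n % 2 == 0)
```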

COMPUTER VISION USING SIMPLECV AND THE RASPBERRY PI

Reference:

Practical Computer Vision with SimpleCV, Demaagd et al. (2012)

Enabling Computers To See

SimpleCV is an open source framework for building computer vision applications.

With it, you get access to several high-powered computer vision libraries such as OpenCV – without having to first learn about bit depths, file formats, color spaces, buffer management, eigenvalues, or matrix versus bitmap storage.

This is computer vision made easy.

SimpleCV is an open source framework

It is a collection of libraries and software that you can use to develop vision applications.

It lets you work with the images or video streams that come from webcams, Kinects, FireWire and IP cameras, or mobile phones.

It helps you build software that lets your various technologies not only see the world but also understand it.

SimpleCV is written in Python and is free to use. It runs on Mac, Windows, and Ubuntu Linux, and it is licensed under the BSD license.

Features

• Convenient "Superpack" installation for rapid deployment

• Feature detection and discrimination of corners, edges, blobs, and barcodes

• Filtering and sorting of image features by location, color, quality, and/or size

• An integrated IPython interactive shell that makes developing code easy

• Image manipulation and format conversion

• Capture and processing of video streams from Kinect, webcams, FireWire, IP cameras, or even mobile phones

Learn how to build your own computer vision (CV) applications quickly and easily with SimpleCV.

SimpleCV Framework

Installing SimpleCV on Ubuntu can be done through a .deb package: from the SimpleCV download page, click the latest stable release link. This downloads the package and handles the installation of all the required dependencies.

Alternatively, type the following two commands on the command line:

```shell
$ sudo apt-get install ipython python-opencv python-scipy python-numpy python-pygame python-setuptools python-pip
$ sudo pip install https://github.com/ingenuitas/SimpleCV/zipball/master
```

The first command installs the required Python packages, and the second uses the newly installed pip tool to install the latest version of SimpleCV from the GitHub repository.

Once everything is installed, type simplecv on the command line to launch the SimpleCV interactive shell.

Note, however, that even recent Ubuntu distributions may ship an outdated version of OpenCV, one of SimpleCV's major dependencies. If the installation throws errors related to OpenCV, enter the following in a Terminal window:

```shell
$ sudo add-apt-repository ppa:gijzelaar/opencv2.3
$ sudo apt-get update
```

Once SimpleCV is installed, you can also start the SimpleCV interactive Python shell by opening a command prompt and entering python -m SimpleCV.__init__. Most of the examples can be completed from the SimpleCV shell.

Hello World

```python
from SimpleCV import Camera, Display, Image

# Initialize the camera
cam = Camera()

# Initialize the display
display = Display()

# Snap a picture using the camera
img = cam.getImage()

# Show the picture on the screen
img.save(display)
```

The getImage() function retrieves an Image object from the camera at the highest quality possible.

A slightly longer version draws some text on the image and keeps the window open for five seconds:

```python
from SimpleCV import Camera, Display, Image
import time

# Initialize the camera
cam = Camera()

# Initialize the display
display = Display()

# Snap a picture using the camera
img = cam.getImage()

# Show some text
img.drawText("Hello World!")

# Show the picture on the screen
img.save(display)

# Wait five seconds so the window doesn't close right away
time.sleep(5)
```

The shell is built using IPython, an interactive shell for Python development.

Most of the example code in the book is written so that it can be executed as a standalone script.

However, it can still be run in the SimpleCV shell.

The interactive tutorial is started with the tutorial command: >>> tutorial

Introduction to the camera

The simplest setup is a computer with a built-in webcam or an external video camera. These usually fall into a category called USB Video Class (UVC) devices.

UVC has emerged as a “device class” that provides a standard way to control video streaming over USB. Most webcams today support UVC and do not require additional drivers for basic operation. Not all UVC devices support all functions, so when in doubt, experiment with the camera in a program such as guvcview to see what works and what does not. For USB webcams known to work with the Raspberry Pi, see the "RPi Verified Peripherals" list.

Camera Initialization

The following code initializes the camera:

```python
from SimpleCV import Camera

# Initialize the camera
cam = Camera()
```

This approach works when dealing with just one camera, using the default camera resolution, without needing any special calibration.

A shortcut when the goal is simply to initialize the camera and make sure that it is working:

```python
from SimpleCV import Camera

# Initialize the camera
cam = Camera()

# Capture an image and display it
cam.getImage().show()
```

The show() function simply pops up the image from the camera on the screen. It is often necessary to store the image in a variable for additional manipulation instead of simply calling show() after getImage(), but this is a good block of code for a quick test to make sure that the camera is properly initialized and capturing video.

Example output of the basic camera example

To access more than one camera, pass the camera_id as an argument to the Camera() constructor.

On Linux, all peripheral devices have a file created for them in the /dev directory. For cameras, the file names start with video and end with the camera ID, such as /dev/video0 and /dev/video1.

```python
from SimpleCV import Camera

# First attached camera
cam0 = Camera(0)

# Second attached camera
cam1 = Camera(1)

# Show a picture from the first camera
cam0.getImage().show()

# Show a picture from the second camera
cam1.getImage().show()
```

The SimpleCV framework can control many other camera properties. An easy example is forcing the resolution. Almost every webcam supports the standard resolutions of 320×240 and 640×480 (often called “VGA” resolution). Many newer webcams can handle higher resolutions such as 800×600, 1024×768, 1280×1024, or 1600×1200. The following example forces a 640×480 resolution and then draws text in the upper left quadrant of the image, starting at the coordinates (160, 120):

```python
from SimpleCV import Camera

# Request 640x480 images from the first camera
cam = Camera(0, {"width": 640, "height": 480})
img = cam.getImage()
img.drawText("Hello World", 160, 120)
img.show()
```

Notice that the camera's constructor was passed a new argument: a dictionary of properties in the form {"key": value}.

Drawing on Images in SimpleCV

Layers

There can be any number of layers, and whatever is on a higher layer covers the layers below it. Let's look at the SimpleCV logo with some text written on it.

In reality, this is an image with 3 layers, each displaying text. Rotating the image and expanding the layers gives a better idea of what is really happening with image layers.

```python
>>> scv = Image('simplecv')
>>> logo = Image('logo')
>>> # Draw the second image on top of the first
>>> scv.dl().blit(logo)
```

NOTE: Run help DrawingLayer for more information.


Camera class attributes

The Camera() function has a properties argument for basic camera calibration. Multiple properties are passed as a dictionary: comma-separated key/value pairs, with the entire set enclosed in curly braces.

Note that the camera ID number is NOT passed inside the braces, since it is a separate argument. The configuration options are:

- width and height

- brightness

- contrast

- saturation

- hue

- gain

- exposure

The available options are part of the computer’s UVC system.
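Because the properties are an ordinary Python dictionary, they can be built and checked before the camera is opened. The helper below is hypothetical (it is not part of SimpleCV): it accepts only the option names listed above and raises ValueError for anything else, as a safeguard against typos.

```python
# Option names listed above, used as dictionary keys
CAMERA_OPTIONS = {"width", "height", "brightness", "contrast",
                  "saturation", "hue", "gain", "exposure"}


def camera_properties(**settings):
    """Return a properties dict for Camera(camera_id, properties).

    Unknown option names raise ValueError (a safeguard added here,
    not SimpleCV behaviour).
    """
    unknown = sorted(set(settings) - CAMERA_OPTIONS)
    if unknown:
        raise ValueError("unsupported camera option(s): " + ", ".join(unknown))
    return dict(settings)


props = camera_properties(width=640, height=480)
# With SimpleCV installed:
#   from SimpleCV import Camera
#   cam = Camera(0, props)
```

Which values a given camera actually honours still depends on its UVC support.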

A live camera feed

To get a live video feed from the camera, use the live() function.

```python
from SimpleCV import Camera

cam = Camera()
cam.live()
```

In addition to displaying live video feed, the live() function has two other very useful properties. The live feed makes it easy to find both the coordinates and the color of a pixel on the screen. To get the coordinates or color for a pixel, use the live() function as outlined in the example above. After the window showing the video feed appears, click the left mouse button on the image for the pixel of interest. The coordinates and color for the pixel at that location will then be displayed on the screen and also output to the shell. The coordinates will be in the (x, y) format, and the color will be displayed as an RGB triplet (R,G,B).

Demonstration of the live feed

To control when a window closes based on the user's interaction with it:

```python
from SimpleCV import Display, Image
import time

display = Display()
Image("logo").save(display)
print("I launched a window")

# This while loop will keep looping until the window is closed
while not display.isDone():
    time.sleep(0.1)

print("You closed the window")
```

The isDone() function checks the event queue and returns True once a quit event has been issued, for example when the user clicks the close button in the corner of the window. The messages are printed to the command prompt, not to the image.

Information about the mouse

While the window is open, the following information about the mouse is available:

mouseX and mouseY (Display class): the coordinates of the mouse

mouseLeft, mouseRight, and mouseMiddle: events triggered when the left, right, or middle mouse button is clicked

mouseWheelUp and mouseWheelDown: events triggered when the scroll wheel on the mouse is moved

How to draw on a screen

The image has a drawing layer, which is accessed with the dl() function. The drawing layer then provides access to the circle() function. If the left mouse button is clicked, the program draws a circle at the mouse position.

```python
from SimpleCV import Display, Image, Color

winsize = (640, 480)
display = Display(winsize)
img = Image(winsize)          # blank image the size of the window
img.save(display)

while not display.isDone():
    # If the button is clicked, draw the circle
    if display.mouseLeft:
        img.dl().circle((display.mouseX, display.mouseY), 4,
                        Color.WHITE, filled=True)
        img.save(display)
        img.save("painting.png")
```

Example using the drawing application

The little circles from the drawing act like a paint brush, coloring in a small region of the screen wherever the mouse is clicked.

Examples

```python
from SimpleCV import Camera, Image
import time

cam = Camera()

# Set the number of frames to capture
numFrames = 10

# Loop until we reach the limit set in numFrames
for x in range(0, numFrames):
    img = cam.getImage()
    filepath = "image-" + str(x) + ".jpg"
    img.save(filepath)
    print("Saved image to: " + filepath)
    time.sleep(60)
```

Color

Introduction

Although color sounds like a relatively straightforward concept, different representations of color are useful in different contexts.

The following examples work with an image of The Starry Night by Vincent van Gogh (1889).

In the SimpleCV framework, the color of an individual pixel is extracted with the getPixel() function:

from SimpleCV import Image
img = Image('starry_night.png')
print img.getPixel(0, 0)

This prints the RGB triplet for the pixel at (0, 0), which will equal (71.0, 65.0, 54.0).

Example RGB: the original image and its R, G, and B components

HSV

One criticism of RGB is that it does not specifically model luminance, yet luminance (brightness) is one of the most commonly manipulated properties. In theory, luminance is determined by the relationship among the R, G, and B values. In practice, however, it is often more convenient to separate the color values from the luminance values. The solution is HSV, which stands for hue, saturation, and value: the color is defined by the hue and saturation, while the value measures the luminance (brightness).

The HSV color space is essentially just a transformation of the RGB color space because all colors in the RGB space have a corresponding unique color in the HSV space, and vice versa.

Example HSV: the original image and its hue, saturation, and value (intensity) components

RGB and HSV

The HSV color space is often preferred because it corresponds more closely to how people experience color than the RGB color space does.

It is easy to convert images between the RGB and HSV color spaces, as demonstrated below:

from SimpleCV import Image
img = Image('starry_night.png')

# Convert the image from the original RGB to HSV
hsv = img.toHSV()
# Print the HSV values for the pixel
print hsv.getPixel(25, 25)

# Convert the image back to RGB
rgb = hsv.toRGB()
# Print the RGB values for the pixel
print rgb.getPixel(25, 25)
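As a quick illustration of what this conversion does to a single pixel, the sketch below uses Python's standard colorsys module (plain Python 3, no SimpleCV needed) on the (0, 0) pixel value quoted earlier. It assumes 0-255 RGB input and reports hue in degrees, with saturation and value between 0 and 1. SimpleCV builds on OpenCV, which stores 8-bit HSV on a different scale (OpenCV halves the hue, for instance), so these numbers will not match toHSV() output directly; the helper names are hypothetical.

```python
import colorsys


def rgb255_to_hsv(r, g, b):
    """Convert 0-255 RGB to (hue in degrees, saturation 0-1, value 0-1)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s, v


def hsv_to_rgb255(hue_deg, s, v):
    """Inverse of rgb255_to_hsv, rounding back to 0-255 integers."""
    r, g, b = colorsys.hsv_to_rgb(hue_deg / 360.0, s, v)
    return tuple(int(round(c * 255)) for c in (r, g, b))


# The (0, 0) pixel of starry_night.png quoted above
pixel = (71, 65, 54)
hsv = rgb255_to_hsv(*pixel)
print("HSV:", hsv)
print("Back to RGB:", hsv_to_rgb255(*hsv))
```

The round trip returns the original triplet, which reflects the point made above: every RGB color has a corresponding HSV color and vice versa.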

The HSV color space is particularly useful when dealing with an object that has a lot of specular highlights or reflections. In the HSV color space, specular reflections will have a high luminance value (V) and a lower saturation (S) component. The hue (H) component may get noisy depending on how bright the reflection is, but an object of solid color will have largely the same hue even under variable lighting.

Grayscale

A grayscale image represents the luminance of the image but lacks any color components. An 8-bit grayscale image stores each pixel as one of 256 shades of gray, on a scale from 0 (black) to 255 (white).

The challenge is to create a single value from 0 to 255 out of the three red, green, and blue values found in an RGB image. There is no single scheme for doing this, but it is usually done by taking a weighted average of the three.
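One widely used weighting is the ITU-R BT.601 luma formula, 0.299 R + 0.587 G + 0.114 B, which OpenCV uses for its RGB-to-gray conversion. The plain Python 3 sketch below compares it with an unweighted average. Whether SimpleCV's grayscale() uses exactly these weights is an assumption, so treat the numbers as illustrative; the function names are hypothetical.

```python
def weighted_gray(r, g, b):
    """Luma-style weighted average (ITU-R BT.601 weights), rounded to 0-255."""
    return int(round(0.299 * r + 0.587 * g + 0.114 * b))


def plain_gray(r, g, b):
    """Unweighted mean of the three channels, rounded to 0-255."""
    return int(round((r + g + b) / 3.0))


for rgb in [(71, 65, 54), (255, 0, 0), (0, 255, 0), (0, 0, 255)]:
    print(rgb, "weighted:", weighted_gray(*rgb), "plain:", plain_gray(*rgb))
```

Green contributes most to the weighted result, reflecting the eye's greater sensitivity to green, whereas the plain average maps pure red, pure green, and pure blue to the same gray.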

from SimpleCV import Image
img = Image('starry_night.png')
gray = img.grayscale()
print gray.getPixel(0, 0)

getPixel() returns the same number three times. This keeps a consistent format with RGB and HSV, which both return three values. To get the grayscale value for a particular pixel without having to convert the image to grayscale, use getGrayPixel().

The Starry Night, converted to grayscale

Segmentation

Segmentation is the process of dividing an image into areas of related content. Color segmentation works by subtracting away the pixels that are far from the target color while preserving the pixels that are similar to it.

The Image class has a function called colorDistance() that computes the distance between every pixel in an image and a given color. This function takes the RGB value of the target color as an argument and returns another image representing each pixel's distance from that color.

Segmentation Example


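from SimpleCV import Image, Color

yellowTool = Image("yellowtool.png")

# Distance of every pixel from the target yellow: close pixels come out dark
yellowDist = yellowTool.colorDistance((223, 191, 29))

# binarize(50) turns the near-yellow (dark) pixels white; invert() flips the
# mask so near-yellow pixels are black and everything else is white
yellowDistBin = yellowDist.binarize(50).invert()

# Subtracting the mask blacks out everything that is not yellow
onlyYellow = yellowTool - yellowDistBin
onlyYellow.show()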
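The idea behind colorDistance() followed by thresholding can be sketched in plain Python 3 on a tiny hand-made image. This version measures Euclidean distance in RGB and uses a hypothetical keep_color() helper; SimpleCV scales its distance image differently, so the cutoff of 50 below is in raw RGB units and is not comparable to the binarize(50) threshold in the example above.

```python
import math


def color_distance(pixel, target):
    """Euclidean distance between two RGB triplets."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pixel, target)))


def keep_color(image, target, max_dist):
    """Keep pixels within max_dist of target; paint everything else black.

    image is a list of rows, each row a list of (r, g, b) tuples.
    """
    return [
        [px if color_distance(px, target) <= max_dist else (0, 0, 0) for px in row]
        for row in image
    ]


yellow = (223, 191, 29)  # the target color used in the example above
tiny = [
    [(223, 191, 29), (0, 0, 255)],
    [(210, 180, 40), (255, 255, 255)],
]
print(keep_color(tiny, yellow, 50))
```

The slightly darker yellow pixel survives because it is close to the target, while the blue and white pixels are blacked out.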

Basic Feature Detection

Introduction

The human brain does a lot of pattern recognition to make sense of raw visual inputs. After the eye focuses on an object, the brain identifies the characteristics of the object, such as its shape, color, or texture, and then compares these to the characteristics of familiar objects to match and recognize the object.

In computer vision, the process of deciding what to focus on is called feature detection. A feature can be formally defined as “one or more measurements of some quantifiable property of an object, computed so that it quantifies some significant characteristics of the object” (Kenneth R. Castleman, Digital Image Processing, Prentice Hall, 1996).

An easier way to think of it: a feature is an “interesting” part of an image.

A good vision system should not waste time (or processing power) analyzing the unimportant or uninteresting parts of an image, so feature detection helps determine which pixels to focus on. This session focuses on the most basic types of features: blobs, lines, circles, and corners. If the detection is robust, a feature is something that can be reliably detected across multiple images.

Detection criteria

How we describe the feature also determines the situations in which we can detect it. Our detection criteria determine whether we can:

- Find the feature in different locations of the picture (position invariant)
- Find the feature whether it is large or small, near or far (scale invariant)
- Find the feature when it is rotated to different orientations (rotation invariant)

Blobs

Blobs are objects or connected components: regions of similar pixels in an image. Examples:

- a group of brownish pixels together, which might represent food in a pet food detector
- a group of shiny, metal-looking pixels, which on a door detector would represent the door knob
- a group of matte white pixels, which on a medicine bottle detector could represent the cap

Blobs are valuable in machine vision because many things can be described as an area of a certain color or shade in contrast to a background.

findBlobs() can be used to find objects that are lightly colored in an image. If no parameters are specified, the function tries to automatically detect what is bright and what is dark.

Left: Original image of pennies; Right: Blobs detected

Blob measurements

After a blob is identified, we can measure many different things:

- its area
- its width and height
- its centroid
- the number of blobs
- the color of the blobs
- its angle, to see its rotation
- how close it is to a circle, square, or rectangle, or how its shape compares to another blob
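To make these measurements concrete, here is a small plain-Python 3 sketch that labels 4-connected groups of foreground pixels in a binary grid with a breadth-first flood fill, then reports each blob's area, centroid, width, and height. The find_blobs() helper and the toy grid are hypothetical; SimpleCV's findBlobs() works on real images and may use different connectivity rules. The result is sorted by ascending area.

```python
from collections import deque


def find_blobs(binary):
    """Label 4-connected regions of 1s in a binary grid (list of lists of 0/1).

    Returns a list of dicts with area, centroid, width, and height,
    sorted by area in ascending order.
    """
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for y in range(rows):
        for x in range(cols):
            if binary[y][x] != 1 or seen[y][x]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel
            queue = deque([(y, x)])
            seen[y][x] = True
            pixels = []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cx, cy))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and binary[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            xs = [p[0] for p in pixels]
            ys = [p[1] for p in pixels]
            blobs.append({
                "area": len(pixels),
                "centroid": (sum(xs) / float(len(xs)), sum(ys) / float(len(ys))),
                "width": max(xs) - min(xs) + 1,
                "height": max(ys) - min(ys) + 1,
            })
    return sorted(blobs, key=lambda b: b["area"])


grid = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 1, 0],
]
blobs = find_blobs(grid)
print("Count:", len(blobs))
for b in blobs:
    print(b)
```

The grid contains a 2×2 square and a three-pixel vertical bar, so the sketch reports two blobs, the bar first because it has the smaller area.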

Blob detection and measurement

from SimpleCV import Image
pennies = Image("pennies.png")
binPen = pennies.binarize()      # 1
blobs = binPen.findBlobs()       # 2
blobs.show(width=5)              # 3

1. Blobs are most easily detected on a binarized image.
2. findBlobs() is called with no arguments, so it uses its default settings. It returns a FeatureSet, which is:
   - a list of features about the blobs found
   - equipped with a set of defined methods that are useful when handling features
3. The show() function is called on blobs, not on the Image object. It draws each feature in the FeatureSet on top of the original image and then displays the results.

Blob detection and measurement (2)

After the blob is found, several other functions provide basic information about the feature, such as its size, location, and orientation.

from SimpleCV import Image
pennies = Image("pennies.png")
binPen = pennies.binarize()
blobs = binPen.findBlobs()
print "Areas: ", blobs.area()
print "Angles: ", blobs.angle()
print "Centers: ", blobs.coordinates()

Blob detection and measurement (3)

- area(): returns an array of the area of each feature, in pixels. By default, the blobs are sorted by size, so the areas should be in ascending order.
- angle(): returns an array of the angles, measured in degrees, for each feature. The angle is the rotation of the feature away from the x-axis, which is the 0 point (positive values indicate counter-clockwise rotation; negative values, clockwise rotation).
- coordinates(): returns a two-dimensional array of the (x, y) coordinates of the center of each feature.
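A standard way to estimate a blob's angle is from its second-order central image moments. The plain Python 3 sketch below applies that general technique to small hypothetical pixel lists; it is not necessarily how SimpleCV's angle() is computed, and its sign convention and range (-90 to 90 degrees, with y flipped so counter-clockwise is positive) are assumptions chosen to match the description above.

```python
import math


def blob_angle(pixels):
    """Orientation, in degrees, of a blob given as a list of (x, y) pixels.

    Uses second-order central moments. y is flipped so that positive
    angles mean counter-clockwise rotation on screen (image y grows downward).
    """
    n = float(len(pixels))
    xs = [x for x, _ in pixels]
    ys = [-y for _, y in pixels]  # flip y to a math-style axis
    mx, my = sum(xs) / n, sum(ys) / n
    mu20 = sum((x - mx) ** 2 for x in xs)
    mu02 = sum((y - my) ** 2 for y in ys)
    mu11 = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return math.degrees(0.5 * math.atan2(2 * mu11, mu20 - mu02))


horizontal = [(0, 0), (1, 0), (2, 0)]
rising = [(0, 2), (1, 1), (2, 0)]    # goes up and to the right on screen
falling = [(0, 0), (1, 1), (2, 2)]   # goes down and to the right on screen
print(blob_angle(horizontal), blob_angle(rising), blob_angle(falling))
```

The three test shapes come out at roughly 0, +45, and -45 degrees.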

If the objects of interest are darkly colored on a light background, use the invert() function.

from SimpleCV import Image
img = Image("chessmen.png")
invImg = img.invert()                  # 1
blobs = invImg.findBlobs()             # 2
blobs.show(width=2)                    # 3
img.addDrawingLayer(invImg.dl())       # 4
img.show()

1. invert(): turns the black chess pieces white and turns the white background black.
2. findBlobs(): can then find the lightly colored blobs as it normally does.
3. Show the blobs. Note, however, that this will show the blobs on the inverted image, not the original image.
4. To make the blobs appear on the original image, take the drawing layer from the inverted image (which is where the blob lines were drawn) and add that layer to the original image.

Finding Blobs of a Specific Color

In many cases, the actual color is more important than the brightness or darkness of the objects. Example: find the blobs that represent the blue candies.

Finding Blobs of a Specific Color (2)

from SimpleCV import Color, Image
img = Image("mandms.png")
blue_distance = img.colorDistance(Color.BLUE).invert()   # 1
blobs = blue_distance.findBlobs()                        # 2
blobs.draw(color=Color.PUCE, width=3)                    # 3
blue_distance.show()                                     # 3
img.addDrawingLayer(blue_distance.dl())                  # 4
img.show()

Left: the original image; Center: blobs based on the blue distance; Right: the blobs on the original image

Finding Blobs of a Specific Color (3)

1. colorDistance(): returns an image that shows how far the colors in the original image are from the passed-in Color.BLUE argument.
   - To make this even more accurate, we could find the RGB triplet for the actual blue color on the candy.
   - Because colors close to blue come out black and colors far from blue come out white, we again use the invert() function to switch the target blue colors to white instead.
2. We use the new image to find the blobs representing the blue candies.
   - We can also fine-tune what the findBlobs() function discovers by passing in a threshold argument. The threshold can be either an integer or an RGB triplet. When a threshold value is passed in, the function changes any pixels that are darker than the threshold value to white and any pixels above the value to black.

Finding Blobs of a Specific Color (4)

3. In the previous examples, we used the FeatureSet show() method (blobs.show()) instead of these two lines. That would also work here; the example breaks it into two lines just to show that they are equivalent to the other method.
   - To outline the blue candies in a color not otherwise found in candy, they are drawn in puce, which is a reddish color.
4. As in the previous example, the drawing ends up on the blue_distance image, so copy the drawing layer back to the original image.
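The thresholding rule described in step 2, and the invert() step used throughout these examples, can be sketched on a small grid of gray values in plain Python 3. The binarize() and invert() functions here are hypothetical stand-ins that mirror the rule as described above; in particular, sending a pixel exactly equal to the threshold to black is an assumption.

```python
def binarize(gray, threshold):
    """Pixels darker than threshold become white (255); the rest become black (0).

    This mirrors the thresholding rule described above for an integer threshold.
    """
    return [[255 if v < threshold else 0 for v in row] for row in gray]


def invert(gray):
    """Swap light and dark: 0 becomes 255, 255 becomes 0, and so on."""
    return [[255 - v for v in row] for row in gray]


distance = [
    [10, 200, 30],
    [220, 15, 250],
]
mask = binarize(distance, 50)
print(mask)
print(invert(mask))
```

On a distance image, dark means "close to the target color", so binarizing at 50 turns the close pixels white, and invert() flips the mask when the opposite polarity is needed.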

Blob detection in less-than-ideal light conditions

- Sometimes the lighting conditions can make color detection more difficult.
- To resolve this problem, use hueDistance() instead of colorDistance(). The hue is more robust to changes in light.

from SimpleCV import Color, Image
img = Image("mandms-dark.png")
# Compare hue only, which is less affected by the dim lighting
blue_distance = img.hueDistance(Color.BLUE).invert()
blobs = blue_distance.findBlobs()
blobs.draw(color=Color.PUCE, width=3)
img.addDrawingLayer(blue_distance.dl())
img.show()

Blob detection in less-than-ideal light conditions (2)

Left: blobs detected with hueDistance(); Right: blobs detected with colorDistance()
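Why hue holds up better under dim light can be checked numerically. This plain Python 3 sketch compares an RGB Euclidean distance with an angular hue distance (computed through the standard colorsys module) for a made-up candy color seen brightly and at roughly a third of the light. The colors are invented for illustration, and SimpleCV's own hueDistance() may scale its output differently.

```python
import colorsys
import math


def rgb_distance(a, b):
    """Euclidean distance between two 0-255 RGB triplets."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hue_distance(a, b):
    """Angular distance between the hues of two RGB colors, in degrees (0-180)."""
    ha = colorsys.rgb_to_hsv(*[c / 255.0 for c in a])[0] * 360.0
    hb = colorsys.rgb_to_hsv(*[c / 255.0 for c in b])[0] * 360.0
    diff = abs(ha - hb) % 360.0
    return min(diff, 360.0 - diff)  # hue wraps around, so take the short way


blue = (0, 0, 255)
bright_candy = (30, 60, 220)
dim_candy = (10, 20, 73)  # the same candy under roughly a third of the light
print("RGB distance: bright %.1f, dim %.1f"
      % (rgb_distance(blue, bright_candy), rgb_distance(blue, dim_candy)))
print("Hue distance: bright %.1f, dim %.1f"
      % (hue_distance(blue, bright_candy), hue_distance(blue, dim_candy)))
```

Dimming more than doubles the RGB distance from blue, while the hue distance stays at about 9.5 degrees in both cases.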

Lines and Circles

Lines

- A line feature is a straight edge in an image that usually denotes the boundary of an object.
- The calculations involved in identifying lines can be a bit complex, because an edge is really a list of (x, y) coordinates, and any two coordinates could possibly be connected by a straight line.

Left: Four coordinates; Center: One possible scenario for lines connecting the points; Right: An alternative scenario

Hough transform

- Behind the scenes, this problem is handled with the Hough transform technique.
- This technique effectively looks at all of the possible lines through the points and then figures out which lines show up most often. The more frequent a line is, the more likely the line is an actual feature.
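The voting idea can be sketched in a few lines of plain Python 3. Each point votes for every line through it, written in the usual (theta, rho) form, and the bin with the most votes wins. This is the textbook Hough transform on a hand-made list of edge points, not SimpleCV's own implementation (which starts from Canny edges); hough_lines() is a hypothetical helper.

```python
import math
from collections import Counter


def hough_lines(points, theta_step=1):
    """Vote in (theta, rho) space for every line through each point.

    A line is written as rho = x*cos(theta) + y*sin(theta); theta is in
    degrees from 0 to 179 and rho is rounded to the nearest integer.
    Returns a Counter mapping (theta, rho) to the number of votes.
    """
    votes = Counter()
    for x, y in points:
        for theta in range(0, 180, theta_step):
            t = math.radians(theta)
            rho = int(round(x * math.cos(t) + y * math.sin(t)))
            votes[(theta, rho)] += 1
    return votes


# Fifty edge points on the horizontal line y = 5, plus two stray points
edge_points = [(x, 5) for x in range(50)] + [(7, 30), (40, 12)]
votes = hough_lines(edge_points)
(best_theta, best_rho), count = votes.most_common(1)[0]
print("theta=%d deg, rho=%d, votes=%d" % (best_theta, best_rho, count))
```

The winning bin is theta = 90 degrees, rho = 5 (the line y = 5) with 50 votes, while the stray points only add isolated single votes. A vote threshold, like the threshold parameter of findLines(), decides how many votes a bin needs before it counts as a line.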

The findLines() function utilizes the Hough transform and returns a FeatureSet of the lines found. Each line feature provides functions such as:

- coordinates(): returns the (x, y) coordinates of the starting point of the line(s).
- width(): returns the width of the line, which in this context is the difference between the starting and ending x coordinates of the line.
- height(): returns the height of the line, or the difference between the starting and ending y coordinates of the line.
- length(): returns the length of the line in pixels.
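The relationship between these three measurements is plain geometry, shown here in Python 3 for a hypothetical segment. The sketch assumes width and height are absolute differences; the description above does not say whether SimpleCV returns signed values.

```python
import math


def line_measurements(start, end):
    """Width, height, and length of a line segment given its end points."""
    (x1, y1), (x2, y2) = start, end
    width = abs(x2 - x1)    # difference between the x coordinates
    height = abs(y2 - y1)   # difference between the y coordinates
    length = math.hypot(width, height)  # Pythagoras: sqrt(width^2 + height^2)
    return width, height, length


print(line_measurements((10, 20), (40, 60)))
```

For this segment the width is 30, the height is 40, and the length is 50 pixels.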

The example below looks for lines on a block of wood:

from SimpleCV import Image
img = Image("block.png")
lines = img.findLines()
lines.draw(width=3)
img.show()

- The findLines() function returns a FeatureSet of the line features.
- lines.draw(width=3) draws the lines in green on the image, with each line 3 pixels wide.

The findLines() function also accepts parameters that tune its sensitivity:

- threshold: sets how strong the edge should be before it is recognized as a line (default = 80).
- minlinelength: sets the minimum length of recognized lines.
- maxlinegap: determines how much of a gap will be tolerated in a line.
- cannyth1: a threshold parameter used in the edge detection step. It sets the minimum “edge strength”.
- cannyth2: a second parameter for the edge detection, which sets the “edge persistence.”
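The two Canny thresholds work together through hysteresis: pixels above the higher threshold start an edge, and pixels above the lower threshold only extend an edge when they connect to one that has already started. This one-dimensional plain Python 3 sketch shows that rule on made-up edge strengths. It is the general Canny idea; exactly how SimpleCV maps cannyth1 and cannyth2 onto the low and high thresholds is an assumption not confirmed here.

```python
def hysteresis(strengths, low, high):
    """Keep edge pixels along a 1D row using Canny-style hysteresis.

    A pixel is kept if its strength is >= high, or if it is >= low and
    touches (directly or through a chain of such pixels) a kept pixel.
    """
    n = len(strengths)
    keep = [s >= high for s in strengths]
    changed = True
    while changed:  # repeat until no more weak pixels can be attached
        changed = False
        for i in range(n):
            if keep[i] or strengths[i] < low:
                continue
            left = i > 0 and keep[i - 1]
            right = i < n - 1 and keep[i + 1]
            if left or right:
                keep[i] = True
                changed = True
    return keep


row = [10, 60, 70, 120, 65, 20, 70, 30]
print(hysteresis(row, low=50, high=100))
```

The weak pixels next to the strong value 120 are kept, but the isolated 70 near the end is dropped even though it exceeds the low threshold, because it is not connected to a strong edge.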

from SimpleCV import Image
img = Image("block.png")
# Set a low threshold
lines = img.findLines(threshold=10)
lines.draw(width=3)
img.show()

Line detection at a lower threshold

Circles

The method to find circular features is called findCircle(). It returns a FeatureSet of the circular features it finds, and it also has parameters to help set its sensitivity.

The findCircle() parameters are:

- canny: a threshold parameter for the Canny edge detector. The default value is 100. Lower values find more circles; higher values find fewer.
- thresh: the equivalent of the threshold parameter for findLines(). It sets how strong an edge must be before a circle is recognized. The default value for this parameter is 350.
- distance: similar to the maxlinegap parameter for findLines(). It determines how close circles can be before they are treated as the same circle. If left undefined, the system tries to find the best value based on the image being analyzed.
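Circle detection uses the same voting idea as line detection, but in the space of possible centers. The plain Python 3 sketch below is a brute-force version of the Hough circle accumulator for a single, known radius: each edge point votes for every candidate center lying about that radius away from it. Real detectors also search over radii and start from an edge map; hough_circle_centers() and the edge points are hypothetical.

```python
import math
from collections import Counter


def hough_circle_centers(edge_points, radius, width, height, tol=0.5):
    """Vote for circle centers of a single known radius.

    Each edge point votes for every candidate center in a width x height grid
    whose distance from the point is within tol of radius. Returns a Counter
    mapping (cx, cy) to votes.
    """
    votes = Counter()
    for px, py in edge_points:
        for cy in range(height):
            for cx in range(width):
                if abs(math.hypot(px - cx, py - cy) - radius) <= tol:
                    votes[(cx, cy)] += 1
    return votes


# Twelve points that lie exactly on a circle of radius 5 around (10, 10)
offsets = [(5, 0), (-5, 0), (0, 5), (0, -5), (3, 4), (3, -4),
           (-3, 4), (-3, -4), (4, 3), (4, -3), (-4, 3), (-4, -3)]
edges = [(10 + dx, 10 + dy) for dx, dy in offsets]
votes = hough_circle_centers(edges, radius=5, width=21, height=21)
print(votes.most_common(1))
```

The true center (10, 10) collects all 12 votes. Neighboring centers also collect partial votes, which is why a minimum separation between detected circles, like the distance parameter above, is useful for suppressing near-duplicates.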

Circle features provide the following functions:

- radius()
- diameter()
- perimeter(): it may seem strange that this isn’t called circumference, but the term perimeter makes more sense when dealing with non-circular features. Using perimeter here allows for a standardized naming convention.

from SimpleCV import Image, Color

img = Image("pong.png")
# Find circles with custom Canny, edge-strength, and minimum-distance settings
circles = img.findCircle(canny=200, thresh=250, distance=15)
circles = circles.sortArea()
circles.draw(width=4)
# Highlight the first circle in the sorted set in red
circles[0].draw(color=Color.RED, width=4)
img_with_circles = img.applyLayers()
edges_in_image = img.edges(t2=200)
# Show the original, the edge image, and the detected circles side by side
final = img.sideBySide(edges_in_image.sideBySide(img_with_circles)).scale(0.5)
final.show()

- applyLayers(): renders all of the drawing layers onto the current image and returns the result. Optionally, a list of integer indices selects which layers to render.
- sideBySide(): combines two images into a single side-by-side image.

Image showing the detected circles

Corners

Corners:

- are places in an image where two lines meet.
- unlike edges, are relatively unique and effective for identifying parts of an image.
  - For instance, when trying to analyze a square, a vertical line could represent either the left or right side of the square.
  - Likewise, detecting a horizontal line can indicate either the top or the bottom.
  - Each corner, however, is unique. For example, the upper-left corner could not be mistaken for the lower-right, and vice versa. This makes corners helpful when trying to uniquely identify certain parts of a feature.

Note: a corner does not need to be a 90-degree right angle.

The findCorners() function:

- analyzes an image and returns the locations of all of the corners it can find
- returns a FeatureSet of all of the corner features it finds
- has parameters to help fine-tune the corners that are found in an image.
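One classic way to score how "corner-like" a pixel is comes from the Harris detector: sum the image gradients over a small window and compare how strongly they vary in two directions. The plain Python 3 sketch below computes the Harris response on a synthetic bright square. This is the general technique only; SimpleCV's findCorners() is built on OpenCV and may use a related score (such as Shi-Tomasi "good features to track"), which is not confirmed here. The helper name and test image are hypothetical.

```python
def harris_response(img, y, x, k=0.04):
    """Harris corner response at (y, x) for a grayscale image (list of rows).

    Gradients use central differences (pixels outside the image count as 0)
    and are summed over the 3x3 window centred on (y, x).
    R > 0 suggests a corner, R < 0 an edge, and R near 0 a flat region.
    """
    h, w = len(img), len(img[0])

    def p(yy, xx):
        return img[yy][xx] if 0 <= yy < h and 0 <= xx < w else 0

    sxx = syy = sxy = 0.0
    for yy in range(y - 1, y + 2):
        for xx in range(x - 1, x + 2):
            ix = (p(yy, xx + 1) - p(yy, xx - 1)) / 2.0
            iy = (p(yy + 1, xx) - p(yy - 1, xx)) / 2.0
            sxx += ix * ix
            syy += iy * iy
            sxy += ix * iy
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace


# A bright 5x5 square (rows and columns 3-7) on a dark 12x12 background
img = [[1 if 3 <= r <= 7 and 3 <= c <= 7 else 0 for c in range(12)] for r in range(12)]
print("corner:", harris_response(img, 3, 3))
print("edge:  ", harris_response(img, 5, 3))
print("flat:  ", harris_response(img, 10, 10))
```

At the square's top-left corner the gradients vary in both x and y, giving a positive response; along the left edge they vary in only one direction, giving a negative response; and the flat background scores zero.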

from SimpleCV import Image
img = Image('corners.png')
img.findCorners().show()

- Notice that the example finds a lot of corners (by default, up to 50).
- Based on visual inspection, there appear to be four main corners.
- To restrict the number of corners returned, use the maxnum parameter.

from SimpleCV import Image
img = Image('corners.png')
img.findCorners(maxnum=9).show()

Limiting findCorners() to a maximum of nine corners

The XBox Kinect

Introduction

- Historically, the computer vision market has been dominated by 2D vision systems.
- 3D cameras were often expensive, relegating them to niche market applications.
- More recently, however, basic 3D cameras have become available on the consumer market, most notably the XBox Kinect.
- The Kinect is built with two different cameras:
  - The first camera acts like a traditional 2D 640×480 webcam.
  - The second camera generates a 640×480 depth map, which maps the distance between the camera and the object.
- This will not provide a Hollywood-style 3D movie, but it does provide an additional degree of information that is useful for things like feature detection, 3D modeling, and so on.

Installation

- The Open Kinect project provides free drivers that are required to use the Kinect.
- The standard installation on both Mac and Linux includes the Freenect drivers, so no additional installation should be required.
- For Windows users, however, additional drivers must be installed.
- Because the installation requirements from Open Kinect may change, please see their website for installation requirements at http://openkinect.org.

The overall structure of working with the Kinect's 2D camera is similar to working with a local camera. However, initializing the camera is slightly different:

from SimpleCV import Kinect

# Initialize the Kinect (the Kinect() constructor does not take any arguments)
kin = Kinect()

# Snap a picture with the Kinect's 2D camera
img = kin.getImage()
img.show()

Depth Information Extraction

- Using the Kinect simply as a standard 2D camera is a pretty big waste of money. The Kinect is a great tool for capturing basic depth information about an object.
- It measures depth as a number between 0 and 1023, with 0 being the closest to the camera and 1023 being the farthest away.
- SimpleCV automatically scales that range down to a 0 to 255 range.
- Why? Instead of treating the depth map as an array of numbers, it is often desirable to display it as a grayscale image. In this visualization, nearby objects appear as dark grays, whereas objects in the distance appear light gray or white.
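The cost of squeezing 1024 raw depth values into 256 gray levels can be seen with a little integer arithmetic. This plain Python 3 sketch assumes a simple linear mapping; SimpleCV may implement the reduction differently (for example, by bit-shifting), and scale_depth() is a hypothetical helper.

```python
def scale_depth(raw):
    """Map a raw 0-1023 Kinect depth reading linearly onto 0-255 (integer)."""
    if not 0 <= raw <= 1023:
        raise ValueError("raw depth must be in 0..1023")
    return raw * 255 // 1023


levels = {scale_depth(d) for d in range(1024)}
print(scale_depth(0), scale_depth(512), scale_depth(1023))
print("distinct gray levels:", len(levels))
print("1020 and 1022 look the same:", scale_depth(1020) == scale_depth(1022))
```

Every gray level stands for about four raw depth readings, so nearby depths that the sensor could tell apart become indistinguishable in the grayscale image.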

Depth Information Extraction (2)

from SimpleCV import Kinect

# Initialize the Kinect
kin = Kinect()

# This works like getImage, but returns depth information
depth = kin.getDepth()
depth.show()

- The Kinect’s depth map is scaled so that it fits into a 0 to 255 grayscale image.
- This reduces the granularity of the depth map.

A depth image from the Kinect

- It is possible to get the original 0 to 1023 range depth map.
- The function getDepthMatrix() returns a NumPy matrix with the original full range of depth values.
- This matrix is a two-dimensional grid holding each pixel’s depth.

from SimpleCV import Kinect

# Initialize the Kinect
kin = Kinect()

# This returns the 0 to 1023 range depth map
depthMatrix = kin.getDepthMatrix()
print depthMatrix

from SimpleCV import Kinect

# Initialize the Kinect
kin = Kinect()

# Initialize the display
display = kin.getDepth().show()

# Run in a continuous loop forever
while True:
    # Snap a picture and return the grayscale depth map
    depth = kin.getDepth()
    # Show the actual image on the screen
    depth.save(display)

Networked Cameras

Introduction

- The previous examples in this lecture have assumed that the camera is directly connected to the computer.
- However, SimpleCV can also control Internet Protocol (IP) cameras.
  - Popular for security applications, IP cameras contain a small web server and a camera sensor.
  - They stream the images from the camera over a web feed.
- These cameras have recently dropped substantially in price.
  - Low-end cameras can be purchased for as little as for a wired camera and for a wireless camera.

Advantages of IP cameras:

- Two-way audio via a single network cable allows users to communicate with whoever they are watching.
- Flexibility: IP cameras can be moved around anywhere on an IP network (including wireless).
- Distributed intelligence: with IP cameras, video analytics can be placed in the camera itself, allowing scalability in analytics solutions.
- Transmission of commands for PTZ (pan, tilt, zoom) cameras via a single network cable.
- Encryption and authentication: IP cameras offer secure data transmission through encryption and authentication methods such as WEP, WPA, WPA2, TKIP, and AES.
- Remote accessibility: live video from selected cameras can be viewed from any computer, anywhere, and also from many mobile smartphones and other devices.
- IP cameras are able to function on a wireless network.
- PoE (Power over Ethernet): many modern IP cameras can operate without an additional power supply, because the PoE protocol delivers power through the Ethernet cable.

Disadvantages of IP cameras:

- Higher initial cost per camera, except where cheaper webcams are used.
- High network bandwidth requirements: a typical CCTV camera with a resolution of 640×480 pixels at 10 frames per second (10 frame/s) in MJPEG mode requires about 3 Mbit/s.
- As with a CCTV/DVR system, if the video is transmitted over the public Internet rather than a private IP LAN, the system becomes open to a wider audience of hackers and hoaxers.
- Criminals can hack into a CCTV system to observe security measures and personnel, thereby facilitating criminal acts and rendering the surveillance counterproductive.
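The 3 Mbit/s figure quoted above can be put in perspective with simple arithmetic in plain Python 3. The sketch assumes 24-bit color for the uncompressed rate; the 3 Mbit/s stream rate is the figure from the list above, and mjpeg_budget() is a hypothetical helper.

```python
def mjpeg_budget(width, height, fps, stream_mbps, bits_per_pixel=24):
    """Compare a measured MJPEG stream rate with the raw (uncompressed) video rate."""
    raw_mbps = width * height * bits_per_pixel * fps / 1e6
    bytes_per_frame = stream_mbps * 1e6 / 8 / fps  # average size of one JPEG frame
    return raw_mbps, bytes_per_frame, raw_mbps / stream_mbps


raw, frame_bytes, ratio = mjpeg_budget(640, 480, 10, 3.0)
print("raw video: %.1f Mbit/s" % raw)
print("average JPEG frame: %.0f bytes" % frame_bytes)
print("implied compression: about %.0f:1" % ratio)
```

Uncompressed, the same video would need about 73.7 Mbit/s, so 3 Mbit/s implies average JPEG frames of roughly 37.5 KB and a compression ratio of about 25:1. Ten such cameras on one link would still need around 30 Mbit/s.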

- Most IP cameras support a standard HTTP transport mode and stream video in the Motion JPEG (MJPG) format.
- To access an MJPG stream, use the JpegStreamCamera class.
- The basic setup is the same as before, except that the constructor must now be given:
  - the address of the camera, and
  - the name of the MJPG file.

MJPG: a video format in which each video frame or interlaced field of a digital video sequence is separately compressed as a JPEG image.
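Because each MJPG frame is a standalone JPEG, a client can recover frames from the raw stream bytes by scanning for the JPEG start-of-image (FF D8) and end-of-image (FF D9) markers. The plain Python 3 sketch below does this on made-up stream bytes. It is a simplification and not a description of JpegStreamCamera's internals: robust clients usually rely on the multipart boundary and Content-Length headers, since a JPEG may embed a thumbnail with its own markers.

```python
def split_jpeg_frames(buffer):
    """Extract complete JPEG images from a byte buffer of MJPEG stream data.

    Each JPEG starts with the SOI marker FF D8 and ends with the EOI marker
    FF D9. Returns (frames, leftover) where leftover holds any incomplete tail.
    """
    frames = []
    start = buffer.find(b"\xff\xd8")
    while start != -1:
        end = buffer.find(b"\xff\xd9", start + 2)
        if end == -1:
            return frames, buffer[start:]  # keep the partial frame for later
        frames.append(buffer[start:end + 2])
        start = buffer.find(b"\xff\xd8", end + 2)
    return frames, b""


stream = (b"--frame\r\nContent-Type: image/jpeg\r\n\r\n\xff\xd8AAA\xff\xd9"
          b"\r\n--frame\r\nContent-Type: image/jpeg\r\n\r\n\xff\xd8BB")
frames, rest = split_jpeg_frames(stream)
print(len(frames), rest)
```

The first frame is complete and is returned; the second has not fully arrived, so its bytes are handed back to be joined with the next chunk read from the network.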

In general, initializing an IP camera requires the following information:

- The IP address or hostname of the camera (mycamera)
- The path to the Motion JPEG feed (video.mjpg)
- The username and password, if required

from SimpleCV import JpegStreamCamera

# Initialize the webcam by providing the URL to the camera
cam = JpegStreamCamera("http://mycamera/video.mjpg")
cam.getImage().show()

- Try loading the URL in a web browser. It should show the video stream.
- If the video stream does not appear, the URL may be incorrect or there may be other configuration issues.
- One possible issue is that the URL requires a login to access it.

Authentication information

If the video stream requires a username and password, provide that authentication information in the URL:

from SimpleCV import JpegStreamCamera

# Initialize the camera with login info in the URL
cam = JpegStreamCamera("http://admin:1234@192.168.1.10/video.mjpg")
cam.getImage().show()
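When a username or password contains characters such as @ or :, they need to be percent-encoded before being placed in the URL. The plain Python 3 sketch below builds such URLs with the standard urllib.parse.quote function; camera_url() is a hypothetical helper, and the resulting string is what would be passed to JpegStreamCamera. Keep in mind that credentials embedded in a URL can end up in logs and browser history.

```python
from urllib.parse import quote


def camera_url(host, path, username=None, password=None):
    """Build an MJPG stream URL, embedding percent-encoded credentials if given."""
    auth = ""
    if username is not None:
        auth = quote(username, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return "http://%s%s/%s" % (auth, host, path.lstrip("/"))


print(camera_url("mycamera", "video.mjpg"))
print(camera_url("192.168.1.10", "video.mjpg", "admin", "1234"))
print(camera_url("192.168.1.10", "/video.mjpg", "admin", "p@ss:word"))
```

The first two calls reproduce the URLs used in the examples above; the third shows the password p@ss:word encoded as p%40ss%3Aword so that it cannot be confused with the URL's own separators.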

- Many phones and mobile devices today include a built-in camera.
- Tablet computers and both iOS and Android smartphones can be used as network cameras with apps that stream the camera output to an MJPG server.
- To install one of these apps, search for “IP Cam” in the app marketplace on an iPhone/iPad, or search for “IP Webcam” on Android devices.
- Some of these apps are for viewing feeds from other IP cameras, so make sure that the app is designed as a server and not a viewer.

IP Cam Pro by Senstic

IP Webcam by Pavel Khlebovich

Advanced Features

Introduction

- Previous lectures introduced feature detection and extraction, but mostly focused on common geometric shapes like lines and circles. Even blobs assume that there is a contiguous grouping of similar pixels.
- This lecture builds on those results by looking for more complex objects. Most of this detection is performed by searching for a template of a known form in a larger image.
- This lecture also provides an overview of optical flow, which attempts to identify objects that change between two frames.

This lecture covers:

- Finding instances of template images in a larger image
- Using Haar classifiers, particularly to identify faces
- Barcode scanning for 1D and 2D barcodes
- Finding keypoints, which is a more robust form of template matching
- Tracking objects that move

Template Matching

- Goal: learn how to use SimpleCV template matching functions to search for matches between an image patch and an input image.
- What is template matching? Template matching is a technique for finding areas of an image that match (are similar to) a template image (patch).

How does it work?

We need two primary components:

- Source image (I): the image in which we expect to find a match to the template image
- Template image (T): the patch image that will be compared to the source image

Our goal is to detect the highest matching area.

- To identify the matching area, we have to compare the template image against the source image by sliding it.
- By sliding, we mean moving the patch one pixel at a time (left to right, top to bottom). At each location, a metric is calculated that represents how “good” or “bad” the match at that location is (or how similar the patch is to that particular area of the source image).
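The sliding procedure can be written out directly. This plain Python 3 sketch moves a small template over a grayscale grid one pixel at a time and scores each position with the sum of squared differences, keeping the best (lowest) score. The image, the template, and match_template_sqdiff() are hypothetical, and a real implementation such as the one behind findTemplate() is far faster than these nested loops.

```python
def match_template_sqdiff(image, template):
    """Slide template over image (both lists of rows of gray values).

    Returns ((x, y), score) for the top-left position with the smallest
    sum of squared differences; 0 means a perfect match.
    """
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best = None
    for y in range(ih - th + 1):          # move down one pixel at a time
        for x in range(iw - tw + 1):      # move right one pixel at a time
            score = 0
            for dy in range(th):
                for dx in range(tw):
                    diff = template[dy][dx] - image[y + dy][x + dx]
                    score += diff * diff
            if best is None or score < best[1]:
                best = ((x, y), score)
    return best


image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 8, 0],
    [0, 0, 7, 6, 0],
    [0, 0, 0, 0, 0],
]
template = [[9, 8], [7, 6]]
print(match_template_sqdiff(image, template))
```

The template is found with its top-left corner at (2, 1) and a score of 0. Note that the loops only visit positions where the template fits entirely inside the image.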

Bitmap Template Matching

- This algorithm works by searching for instances where a bitmap template (a small image of the object to be found) can be found within a larger image.
- For example, if trying to create a vision system to play Where’s Waldo, the template image would be a picture of Waldo.
- To match his most important feature, his face, the Waldo template would be cropped to right around his head and include a minimal amount of the background.
- A template with his whole body would only match instances where his whole body was visible and positioned exactly the same way.
- The other component in template matching is the image to be searched.

findTemplate() function

- The template matching feature works by calling the findTemplate() function on the Image object to be searched.
- Next, pass as a parameter the template of the object to be found.

Pieces on a Go board

Template of black piece

from SimpleCV import Image

# Get the template and image
goBoard = Image('go.png')
black = Image('go-black.png')

# Find the matches and draw them
matches = goBoard.findTemplate(black)
matches.draw()

# Show the board with matches, then print the number of matches
goBoard.show()
print str(len(matches)) + " matches found."
# Should output: 9 matches found.

Matches for black pieces

- The findTemplate() function also takes two optional arguments:
  - method: defines the algorithm to use for the matching. Details about the available matching algorithms can be found by typing help Image.findTemplate in the SimpleCV shell.
  - threshold: fine-tunes the quality of the matches. It works like the thresholds of other feature-matching functions: decreasing the threshold results in more matches, but could also result in more false positives.
- These tuning parameters can help the quality of results, but template matching is error-prone in all but the most controlled, consistent environments.
- Keypoint matching, which is described in the next section, is a more robust approach.

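In the formulas below, (x, y) is the position of the template's top-left corner in the source image I, and the sums run over every pixel (x', y') of the template T.

- Square difference matching method (CV_TM_SQDIFF): this method matches the squared difference, so a perfect match will be 0 and bad matches will be large:

$$R_{\mathrm{sq\_diff}}(x,y) = \sum_{x',y'} \left[ T(x',y') - I(x+x',y+y') \right]^2$$

- Correlation matching method (CV_TM_CCORR): this method multiplicatively matches the template against the image, so a perfect match will be large and bad matches will be small or 0:

$$R_{\mathrm{ccorr}}(x,y) = \sum_{x',y'} T(x',y') \cdot I(x+x',y+y')$$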

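- Correlation coefficient matching method (CV_TM_CCOEFF): this method matches the template relative to its mean against the image relative to its mean. In its normalized form, a perfect match will be 1 and a perfect mismatch will be -1; a value of 0 simply means that there is no correlation (random alignments).

$$R_{\mathrm{ccoeff}}(x,y) = \sum_{x',y'} T'(x',y') \cdot I'(x+x',y+y')$$

where, for a template of width w and height h,

$$T'(x',y') = T(x',y') - \frac{1}{w \cdot h} \sum_{x'',y''} T(x'',y'')$$

$$I'(x+x',y+y') = I(x+x',y+y') - \frac{1}{w \cdot h} \sum_{x'',y''} I(x+x'',y+y'')$$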

Normalized methods

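- For each of the three methods just described, there are also normalized versions.
- The normalized methods are useful because they can help reduce the effects of lighting differences between the template and the image.
- In each case, the normalization coefficient has the same form, and the normalized score is the raw score divided by it:

$$Z(x,y) = \sqrt{\sum_{x',y'} T(x',y')^2 \cdot \sum_{x',y'} I(x+x',y+y')^2}, \qquad R_{\mathrm{normed}}(x,y) = \frac{R(x,y)}{Z(x,y)}$$

For the correlation coefficient method, T' and I' take the place of T and I in Z(x, y).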

Values of the method parameter for normalized template matching

- As usual, we obtain more accurate matches (at the cost of more computation) as we move from simpler measures (square difference) to more sophisticated ones (correlation coefficient).
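To see why normalization helps with lighting differences, the plain Python 3 sketch below scores a tiny template against a brighter copy of itself, using both the plain squared difference and the normalized correlation coefficient (both patches mean-subtracted, then divided by the product of their norms, following OpenCV's definition of that normalized method). The patches and helper names are hypothetical.

```python
import math


def ccoeff_normed(patch, template):
    """Normalized correlation coefficient of two equal-sized gray patches.

    Both are flattened and mean-subtracted before comparing, so the result
    lies in [-1, 1]: 1 for a perfect match, -1 for a perfect mismatch.
    """
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mean_p = sum(p) / float(len(p))
    mean_t = sum(t) / float(len(t))
    p = [v - mean_p for v in p]
    t = [v - mean_t for v in t]
    denom = math.sqrt(sum(a * a for a in p) * sum(b * b for b in t))
    if denom == 0:
        return 0.0  # a completely flat patch carries no correlation information
    return sum(a * b for a, b in zip(p, t)) / denom


def sqdiff(patch, template):
    """Plain (unnormalized) sum of squared differences; 0 is a perfect match."""
    return sum((a - b) ** 2
               for row_p, row_t in zip(patch, template)
               for a, b in zip(row_p, row_t))


template = [[10, 20], [30, 40]]
brighter = [[v + 50 for v in row] for row in template]  # same object under more light
flipped = [[40, 30], [20, 10]]                          # reversed intensity pattern
print("SQDIFF, brighter:", sqdiff(brighter, template))
print("CCOEFF_NORMED, brighter:", ccoeff_normed(brighter, template))
print("CCOEFF_NORMED, flipped:", ccoeff_normed(flipped, template))
```

The brighter copy scores 10000 under the plain squared difference, which looks like a poor match, but exactly 1 under the normalized correlation coefficient, because subtracting the mean removes the uniform brightness offset. The reversed pattern scores -1, a perfect mismatch.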

Limitations

This approach supports searching for a wide variety of different objects, but:

- The image being searched always needs to be larger than the template image for there to be a match.
- It is not scale or rotation invariant: the size of the object in the template must equal its size in the image.
- Lighting conditions can also have an impact, because the image brightness or the reflection on specular objects can stop a match from being found.
- As a result, the matching works best when used in an extremely controlled environment.

Keypoint Matching

- Tracking an object as it moves between two images is a hard problem for a computer vision system. It requires identifying an object in one image, finding that same object in the second image, and then computing the amount of movement.
- We can detect that something has changed in an image using techniques such as subtracting one image from another.
- While the feature detectors we’ve looked at so far are useful for identifying objects in a single image, they are often sensitive to different degrees of rotation or to variable lighting conditions.
- As these are both common issues when tracking an object in motion, a more robust feature extractor is needed. One solution is to use keypoints.
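Detecting change by subtracting one image from another can be sketched in plain Python 3 on two tiny grayscale frames: take the per-pixel absolute difference, keep the pixels that changed by more than a threshold, and report where they are. The frames, the threshold of 30, and changed_region() are hypothetical.

```python
def changed_region(frame1, frame2, threshold=30):
    """Find pixels whose gray value changed by more than threshold.

    Returns (count, bounding_box) where bounding_box is (x_min, y_min, x_max, y_max)
    of the changed pixels, or None if nothing changed.
    """
    changed = [
        (x, y)
        for y, (row1, row2) in enumerate(zip(frame1, frame2))
        for x, (a, b) in enumerate(zip(row1, row2))
        if abs(a - b) > threshold
    ]
    if not changed:
        return 0, None
    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    return len(changed), (min(xs), min(ys), max(xs), max(ys))


before = [
    [10, 10, 10, 10],
    [10, 200, 10, 10],
    [10, 10, 10, 10],
]
after = [
    [10, 10, 10, 10],
    [10, 10, 200, 10],
    [10, 10, 10, 10],
]
print(changed_region(before, after))
```

The bright pixel moved one step to the right, so both its old and new positions show up as changed. The difference says where something changed, but not which object moved or how far, which is the gap keypoints help to fill.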

116

116

• A keypoint describes an object in terms that are independent of position, rotation, and lighting.
• For example, in many environments, corners make good keypoints.
• As described earlier, a corner is formed by two lines intersecting at any angle.
• Move a corner from the top-left to the bottom-right of the screen, and the angle between the two intersecting lines remains the same.
• Rotate the image, and the angle remains the same.
• Shine more light on the corner, and it’s still the same angle.
• Scale the image to twice its size, and again, the angle remains the same.
• Corners are therefore robust to many environmental conditions and image transformations.
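The invariance argument above can be checked numerically. This sketch computes the angle at a corner from the corner point and one point on each arm, then applies a translation, rotation, and uniform scaling; corner_angle and transform are hypothetical helper names. Lighting is not modelled, since changing brightness does not move the points that define the angle.

```python
import math


def corner_angle(corner, a, b):
    """Angle in degrees between the rays corner->a and corner->b."""
    ax, ay = a[0] - corner[0], a[1] - corner[1]
    bx, by = b[0] - corner[0], b[1] - corner[1]
    dot = ax * bx + ay * by
    cross = ax * by - ay * bx
    return math.degrees(math.atan2(abs(cross), dot))


def transform(point, scale=1.0, theta_deg=0.0, dx=0.0, dy=0.0):
    """Rotate by theta_deg about the origin, scale uniformly, then translate."""
    t = math.radians(theta_deg)
    x, y = point
    xr = scale * (x * math.cos(t) - y * math.sin(t))
    yr = scale * (x * math.sin(t) + y * math.cos(t))
    return (xr + dx, yr + dy)


corner, a, b = (0, 0), (5, 0), (5, 5)
print(round(corner_angle(corner, a, b), 6))  # 45.0

# Move, rotate by 30 degrees, and double the size of the whole corner.
moved = [transform(p, scale=2.0, theta_deg=30.0, dx=120.0, dy=80.0)
         for p in (corner, a, b)]
print(round(corner_angle(*moved), 6))  # 45.0
```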


• Keypoints can be more than just corners, but corners provide an intuitive framework for understanding the underpinnings of keypoint detection.
• The keypoints are extracted using the findKeypoints() method of the SimpleCV Image class.
• In addition to the typical feature properties, such as size and location, a keypoint also has a descriptor() function that outputs the descriptor values, which are robust to location, rotation, scale, and so on.
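To make corner detection concrete, here is a sketch of the Harris corner response, one classic corner detector. It is shown only as an illustration: SimpleCV's findKeypoints() supports other extraction methods such as SURF, and harris_response, strongest_corners, and the constant k = 0.04 are assumptions of this sketch. For each pixel it sums products of image gradients over a 3x3 window into a 2x2 matrix M; the response R = det(M) - k * trace(M)^2 is large and positive at corners, negative along straight edges, and zero in flat regions.

```python
def harris_response(img, k=0.04):
    """Harris response R = det(M) - k * trace(M)**2 for each interior pixel.

    img is a list of rows of grey levels; returns a dict {(row, col): R}.
    """
    h, w = len(img), len(img[0])
    # Central-difference gradients; the one-pixel border is left at zero.
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2

    response = {}
    # Only use pixels whose 3x3 window lies entirely on computed gradients.
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            sxx = syy = sxy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            response[(y, x)] = (sxx * syy - sxy * sxy) - k * (sxx + syy) ** 2
    return response


def strongest_corners(img, n=4, k=0.04):
    """Return the n pixel positions with the largest Harris response."""
    r = harris_response(img, k)
    return sorted(r, key=r.get, reverse=True)[:n]


# A bright 8x8 square on a dark 14x14 background.
img = [[1 if 3 <= y <= 10 and 3 <= x <= 10 else 0 for x in range(14)]
       for y in range(14)]
print(sorted(strongest_corners(img)))  # [(3, 3), (3, 10), (10, 3), (10, 10)]
```

The four strongest responses fall exactly on the square's corners, while pixels along its straight sides score below zero.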

[Figure: A common hotel room keycard and the extracted keypoints]


findKeypoints() example

```python
from SimpleCV import Image

card = Image('keycard.png')
keys = card.findKeypoints()  # 1. Find the keypoints on the image
keys.draw()                  # 2. Draw the keypoints on the image
card.show()
```

1. Find the keypoints on the image using the findKeypoints() method.
2. Draw those keypoints on the screen. They will appear as green circles at each point. The size of the circle represents the quality of the keypoint.


• The keypoints above are not particularly valuable on their own, but the next step is to apply this concept to template matching.
• As with the findTemplate() approach, a template image is used to find keypoint matches. However, unlike the previous example, keypoint matching does not work directly off the template image.
• Instead, the process extracts the keypoints from the template image and then looks for those keypoints in the target image. This results in a much more robust matching system.


• Keypoint matching works best when the object has a lot of texture, with diverse colors and shapes.
• Objects with uniform color and simple shapes do not have enough keypoints to find good matches.
• Matching works best with small rotations, usually less than 45 degrees. Larger rotations can work, but the algorithm will have a harder time finding a match.
• Whereas the findTemplate() function looks for multiple matches of the template, the findKeypointMatch() function returns only the best match for the template.


The findKeypointMatch() function takes the following parameters:
• template (required): The template image to search for in the target image.
• quality: Configures the quality threshold for matches. Default: 500; range for best results: [300:500].
• minDist: The distance threshold between two feature vectors below which they are treated as a match. The lower the value, the better the quality of the matches; too low a value, though, prevents good matches from being returned. Default: 0.2; range for best results: [0.05:0.3].
• minMatch: The minimum percentage of feature matches that must be found to match an object with the template. Default: 0.4 (40%); good values typically range: [0.3:0.7].
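The roles of minDist and minMatch can be illustrated with a simplified matcher. This is a hypothetical sketch, not SimpleCV's implementation: it assumes each keypoint is already described by a short numeric descriptor vector (real SURF descriptors have 64 or 128 values), uses plain Euclidean distance, and pairs each template descriptor with its nearest neighbour in the target. A pair counts as a match when its distance is below min_dist, and the object is reported as found when the fraction of matched template keypoints reaches min_match.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def keypoint_match(template_desc, target_desc, min_dist=0.2, min_match=0.4):
    """Return (found, pairs), where pairs lists (template_index, target_index)."""
    if not template_desc or not target_desc:
        return False, []
    pairs = []
    for i, d in enumerate(template_desc):
        # Nearest neighbour of this template descriptor among the target's.
        j, dist = min(((k, euclidean(d, e)) for k, e in enumerate(target_desc)),
                      key=lambda item: item[1])
        if dist < min_dist:
            pairs.append((i, j))
    found = len(pairs) / len(template_desc) >= min_match
    return found, pairs


# Toy 2-D descriptors (real ones are much longer).
template_desc = [(1.0, 0.0), (0.0, 1.0), (0.6, 0.8)]
target_desc = [(0.98, 0.1), (0.1, 0.99), (-1.0, 0.0)]
print(keypoint_match(template_desc, target_desc))
# (True, [(0, 0), (1, 1)]): 2 of 3 keypoints match, and 2/3 >= 0.4
print(keypoint_match(template_desc, target_desc, min_match=0.7))
# (False, [(0, 0), (1, 1)]): 2/3 falls short of 0.7
```

Lowering min_dist makes each accepted pair more trustworthy but, as the parameter description warns, eventually rejects genuine matches too.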


• Flavor: a string indicating the method to use to extract features.
  • “SURF”: extract the SURF features and descriptors. If you don’t know what to use, use this. http://en.wikipedia.org/wiki/SURF
  • “STAR”: the STAR feature extraction algorithm. http://pr.willowgarage.com/wiki/Star_Detector
  • “FAST”: the FAST keypoint extraction algorithm. http://en.wikipedia.org/wiki/Corner_detection#AST_based_feature_detectors
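The segment test at the heart of FAST can be sketched as follows. This is a simplified, hypothetical illustration rather than the implementation SimpleCV uses: it omits FAST's learned decision tree and non-maximum suppression, and the function names, threshold t = 20, and run length n = 9 are assumptions. A pixel is a corner when at least n contiguous pixels on a 16-pixel circle of radius 3 around it are all brighter than p + t or all darker than p - t, where p is the centre pixel's intensity.

```python
# (dx, dy) offsets of the 16-pixel circle of radius 3 used by the FAST test.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img, x, y, t=20, n=9):
    """Segment test: n contiguous circle pixels all brighter or all darker."""
    p = img[y][x]
    states = []
    for dx, dy in CIRCLE:
        q = img[y + dy][x + dx]
        states.append(1 if q > p + t else -1 if q < p - t else 0)
    # Walk the circle twice so that runs wrapping past the start are counted.
    run, prev = 0, 0
    for s in states + states:
        run = run + 1 if (s != 0 and s == prev) else (1 if s != 0 else 0)
        prev = s
        if run >= n:
            return True
    return False


def fast_corners(img, t=20, n=9):
    """Return (x, y) of every pixel at least 3 pixels from the border that passes."""
    h, w = len(img), len(img[0])
    return [(x, y) for y in range(3, h - 3) for x in range(3, w - 3)
            if is_fast_corner(img, x, y, t, n)]


# A bright square (rows and columns 5..14) on a dark 20x20 background.
img = [[255 if 5 <= y <= 14 and 5 <= x <= 14 else 0 for x in range(20)]
       for y in range(20)]
print(is_fast_corner(img, 5, 5))    # True: corner of the square
print(is_fast_corner(img, 10, 5))   # False: middle of the top edge
print(is_fast_corner(img, 10, 10))  # False: flat interior
```

At the square's corner, 11 contiguous circle pixels are darker than the centre; on a straight edge only 7 are, which is why the edge pixel fails the test.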


• The matches can be shown as a side-by-side image, with lines drawn between the two images to indicate where a match was found.
• If many lines are drawn, the matching algorithm should work.


```python
from SimpleCV import Image

template = Image('ch10-card.png')
img = Image('ch10-card-on-table.png')

# Find the single best keypoint match for the template in the image.
match = img.findKeypointMatch(template)
if match:
    # Draw a box around the detected object.
    match.draw(width=3)
img.show()
```


• Note that keypoint matching finds only the single best match for the image. If multiple keycards were on the table, it would report only the closest match.

[Figure: The detected match for the keycard]

http://www.youtube.com/watch?v=CxPeoQDc2-Y


• The next logical step beyond template matching is to understand how to track objects between frames.
• Optical flow is very similar to template matching in that it takes a small area of one image and then scans the same region in a second image in an attempt to find a match.
• If a match is found, the system indicates the direction of travel, measured in (X, Y) points.
• Only the two-dimensional travel distance is recorded. If the object also moved closer to or farther away from the camera, that distance is not recorded.
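The block-matching idea behind optical flow can be sketched in plain Python. This is a minimal, hypothetical illustration (block_motion, the 3x3 block, and the search radius of 2 are assumptions), not SimpleCV's findMotion() code: it takes a small block from the previous frame, tries every displacement within the search radius in the current frame, and returns the (X, Y) offset with the smallest sum of absolute differences. Like the optical flow described above, it recovers only two-dimensional motion.

```python
def sad(prev, curr, top, left, dx, dy, size):
    """Sum of absolute differences between a block and its displaced copy."""
    return sum(abs(prev[top + r][left + c] - curr[top + dy + r][left + dx + c])
               for r in range(size) for c in range(size))


def block_motion(prev, curr, top, left, size=3, radius=2):
    """Return the (dx, dy) displacement that best matches the block at (top, left)."""
    h, w = len(curr), len(curr[0])
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            t, l = top + dy, left + dx
            if t < 0 or l < 0 or t + size > h or l + size > w:
                continue  # displaced block would fall outside the frame
            cost = sad(prev, curr, top, left, dx, dy, size)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best[1], best[2]


# A 3x3 pattern that moves 2 pixels right and 1 pixel down between frames.
prev = [[0] * 10 for _ in range(10)]
curr = [[0] * 10 for _ in range(10)]
for r in range(3):
    for c in range(3):
        prev[3 + r][3 + c] = curr[4 + r][5 + c] = 1 + 3 * r + c
print(block_motion(prev, curr, 3, 3))  # (2, 1)
```

A larger search radius can follow faster motion, but the number of candidate blocks grows with the square of the radius, which is one reason block matching is slow.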


• The optical flow is computed between the current image and a previous frame.
• A single parameter is required: previous_frame, the image used for comparison.
• The previous frame must be the same size as the current image.


```python
from SimpleCV import Camera, Color, Display

cam = Camera()
previous = cam.getImage()
disp = Display(previous.size())

while not disp.isDone():
    current = cam.getImage()
    motion = current.findMotion(previous)
    # Draw the little red motion lines on the screen
    # to indicate where the motion occurred.
    for m in motion:
        m.draw(color=Color.RED, normalize=False)
    current.save(disp)
    previous = current
```


Optical flow example

• The findMotion function supports several different algorithms to detect motion. For additional information, type help Image in the SimpleCV shell.

http://www.youtube.com/watch?v=V4r2HXGA8jw


• previous_frame: the last frame as an Image.
• window: the block size for the algorithm. For the HS and LK methods, this is the regular sample grid at which motion samples are returned. For the block matching method, this is the matching window size.
• method: the algorithm to use, as a string. Your choices are:
  • ‘BM’: the default; block matching, robust but slow. If you are unsure, use this.