Digital Signal Processing

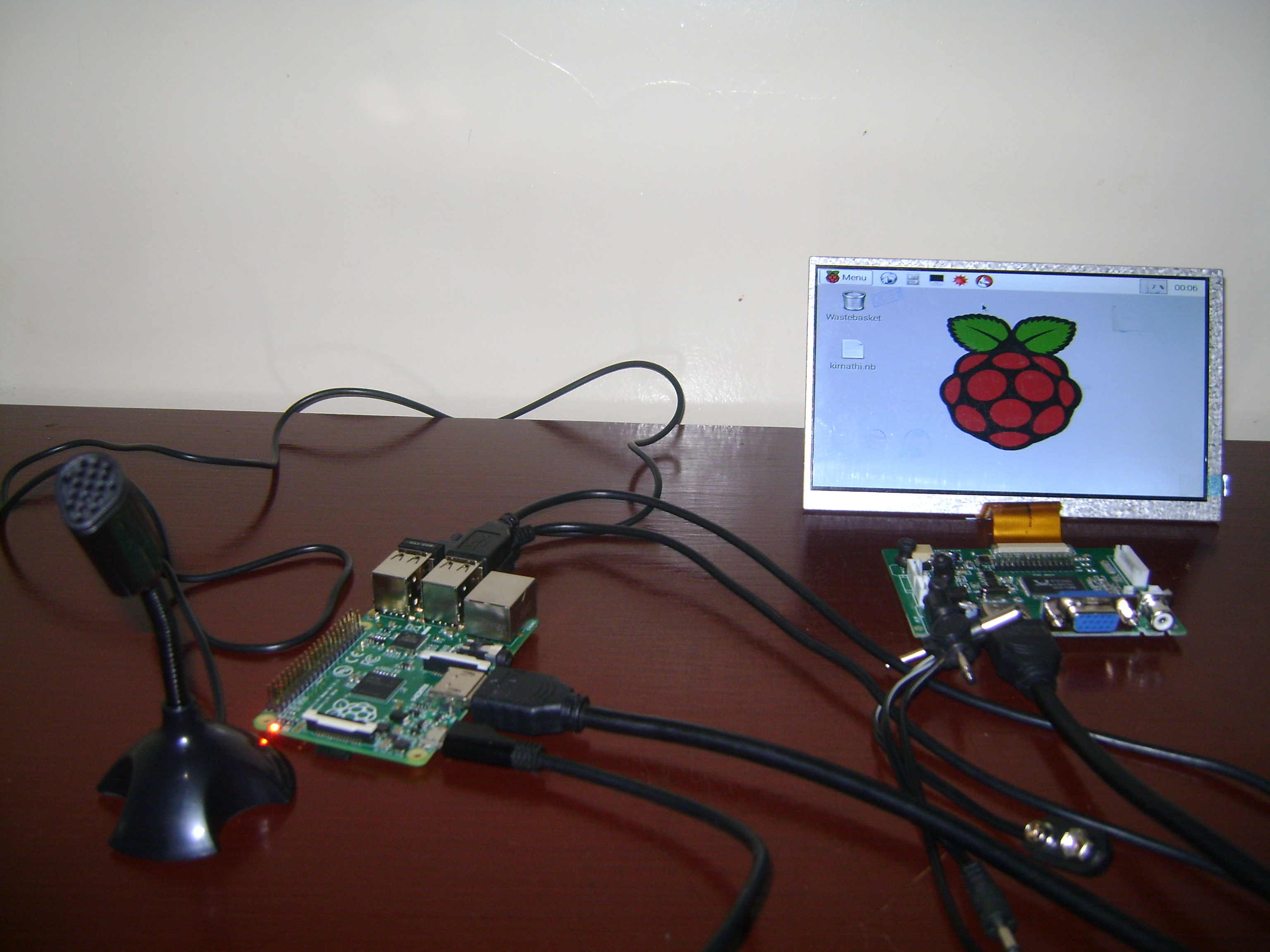

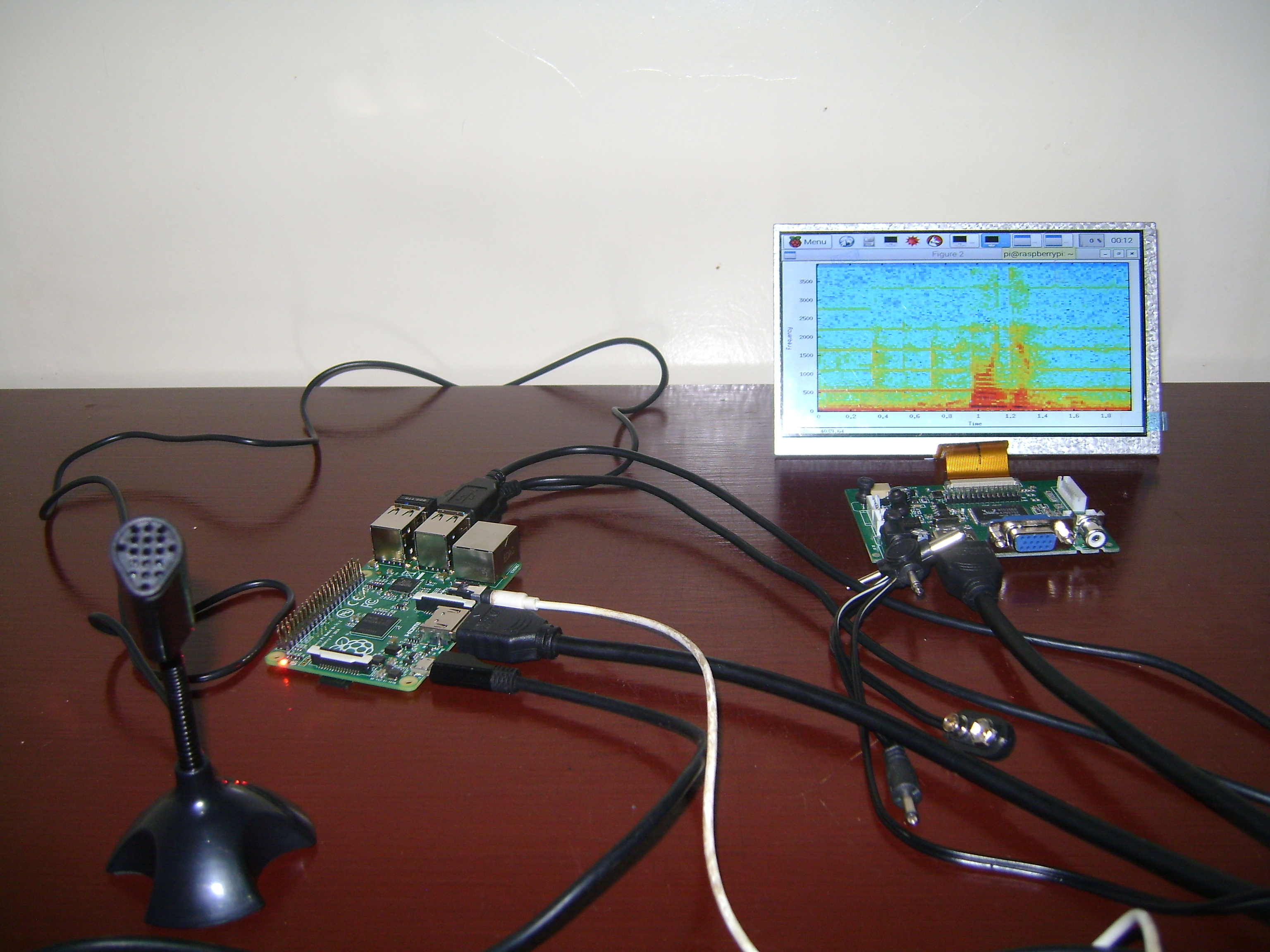


Latest revision as of 14:40, 19 August 2015

Students at DeKUT are currently taught digital signal processing (DSP) in the final year of their five-year program. The course aims to introduce students to a number of fundamental DSP concepts, including:

- Discrete time signals and systems

- Linear time invariant (LTI) systems

- Frequency-domain representation of discrete time systems

- z-transform

- Sampling of continuous time signals

- Filter design

- The discrete Fourier transform

Currently, the laboratory exercises in this course are Matlab-based and focus on learning how to manipulate discrete signals, plot the frequency responses of digital LTI systems and design digital filters. These exercises are designed to reinforce the students' understanding of DSP theory.
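Plotting the frequency response of a digital LTI system amounts to evaluating its transfer function on the unit circle, $H(e^{j\omega}) = \sum_k b_k e^{-j\omega k} / \sum_k a_k e^{-j\omega k}$. The sketch below does this in plain Python at a few frequencies. The function name `freq_response` and the two-point moving-average example are illustrative assumptions, not taken from the existing Matlab exercises.

```python
import cmath
import math


def freq_response(b, a, omegas):
    """Evaluate H(e^{jw}) = B(e^{jw}) / A(e^{jw}) at each radian frequency in omegas.

    b: numerator (feed-forward) coefficients b[0], b[1], ...
    a: denominator (feedback) coefficients a[0], a[1], ... with a[0] != 0
    """
    response = []
    for w in omegas:
        # z^{-k} evaluated on the unit circle is e^{-j w k}
        num = sum(bk * cmath.exp(-1j * w * k) for k, bk in enumerate(b))
        den = sum(ak * cmath.exp(-1j * w * k) for k, ak in enumerate(a))
        response.append(num / den)
    return response


if __name__ == "__main__":
    # Hypothetical example: two-point moving average y[n] = 0.5 x[n] + 0.5 x[n-1] (low-pass)
    b, a = [0.5, 0.5], [1.0]
    omegas = [0.0, math.pi / 2, math.pi]
    for w, h in zip(omegas, freq_response(b, a, omegas)):
        print(f"w = {w:.3f} rad/sample  |H| = {abs(h):.3f}")
```

For the moving average, |H| falls from 1 at ω = 0 to about 0.707 at π/2 and 0 at π, which is the low-pass behaviour a frequency-response plot would show.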

We propose to design a Raspberry Pi-based DSP laboratory that will further enhance the understanding of these concepts by exposing the students to the processing of the human voice. Many DSP applications found in modern-day electronics deal with speech processing; these include speaker identification and speech identification. We aim to introduce the students to speech processing using a simple example: estimating the fundamental frequency of a speech segment. It is hoped that this will motivate the students to explore more advanced applications such as speech recognition.

Background

Human speech is arguably one of the most important signals encountered in engineering applications. Numerous devices record and manipulate speech signals to achieve different ends. To manipulate the signal properly, it is important to understand how speech is produced. The lungs, vocal tract and vocal cords all play an important role in speech production. The speech production model consists of an excitation signal, driven by air from the lungs, and a linear filter. For unvoiced sounds the excitation is modelled as a white noise process, which is spectrally flat; for voiced sounds it is modelled as a periodic pulse train produced by the vibrating vocal cords. The excitation is then spectrally shaped by a filter that models the properties of the vocal tract. Since the properties of the vocal tract change constantly as different sounds are produced, the filter is time varying. However, the filter is often modelled as quasi-stationary, with its parameters held constant over a period of approximately 32 ms.

When the vocal cords vibrate, as is the case when pronouncing the sound /a/ in cat, we say that the sound is voiced, and the signal is seen to exhibit some periodicity. When the vocal cords do not vibrate, the sound is unvoiced.
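The source-filter model described above can be sketched in a few lines: an excitation (a periodic impulse train for voiced sounds, white noise for unvoiced sounds) is passed through an all-pole filter standing in for the vocal tract. This is a minimal sketch under simplifying assumptions: a single fixed second-order resonator (hypothetical 500 Hz resonance, pole radius 0.95) replaces a real time-varying vocal-tract filter, and the sampling rate, pitch and function names are illustrative.

```python
import math
import random


def impulse_train(n_samples, period):
    """Voiced excitation: a unit impulse every `period` samples."""
    return [1.0 if n % period == 0 else 0.0 for n in range(n_samples)]


def white_noise(n_samples, seed=0):
    """Unvoiced excitation: zero-mean, unit-variance Gaussian white noise."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n_samples)]


def resonator(x, fs, f_res, radius):
    """All-pole filter y[n] = x[n] - a1*y[n-1] - a2*y[n-2] with poles at radius*e^{+/-j*theta}.

    A single resonance is a simplifying stand-in (assumption) for the vocal-tract filter.
    """
    theta = 2.0 * math.pi * f_res / fs
    a1 = -2.0 * radius * math.cos(theta)
    a2 = radius * radius
    y = []
    y1 = y2 = 0.0  # previous two outputs y[n-1], y[n-2]
    for xn in x:
        yn = xn - a1 * y1 - a2 * y2
        y.append(yn)
        y2, y1 = y1, yn
    return y


if __name__ == "__main__":
    fs = 8000                # sampling rate in Hz (assumed)
    n = fs * 32 // 1000      # one 32 ms quasi-stationary segment = 256 samples
    period = fs // 125       # 125 Hz pitch -> 64-sample period (illustrative)
    voiced = resonator(impulse_train(n, period), fs, f_res=500, radius=0.95)
    unvoiced = resonator(white_noise(n), fs, f_res=500, radius=0.95)
    print(len(voiced), len(unvoiced))
```

Once the filter's transient has decayed, the voiced output repeats every pitch period, while the unvoiced output has the same spectral envelope but no periodicity.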

Estimation of Fundamental Frequency

When speech is voiced, it exhibits periodicity, and many speech applications need to estimate the pitch of such signals. To do this, we estimate the fundamental frequency of the signal, also referred to as F0. A popular method for estimating F0 is based on the autocorrelation function (ACF), which measures the similarity between a signal and a copy of itself delayed by a given time lag. If the signal is periodic, we expect this function to have a peak at zero lag and further peaks at lags equal to integer multiples of the signal period. For a finite-duration segment the ACF still peaks at these lags, although the peaks typically shrink as the lag grows because fewer samples overlap. To apply this method to a speech signal, we compute the ACF of a segment 32 ms long, over which the characteristics of the signal can be assumed to be stationary. The lag of the largest peak after zero lag, searched within the range of plausible pitch periods, estimates the period, and F0 is its reciprocal.
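One common way to write the (unnormalised) ACF of an N-sample segment x[n] is

$$r[k] = \sum_{n=0}^{N-1-k} x[n]\,x[n+k], \qquad k = 0, 1, \dots, N-1,$$

and if the largest peak within the range of plausible pitch lags occurs at lag $T_0$ samples, then $F_0 \approx f_s / T_0$ for a sampling rate $f_s$. The sketch below applies this to a single 32 ms frame. The 50 to 400 Hz search range and the voicing threshold of 0.3 on the normalised peak $r[T_0]/r[0]$ are illustrative assumptions, not values from the lab manuals.

```python
import math


def autocorr(x):
    """Unnormalised ACF r[k] = sum_n x[n] * x[n+k] for k = 0 .. len(x)-1."""
    n = len(x)
    return [sum(x[i] * x[i + k] for i in range(n - k)) for k in range(n)]


def estimate_f0(frame, fs, f_min=50.0, f_max=400.0, threshold=0.3):
    """Estimate F0 (Hz) of one frame from the largest ACF peak in the pitch-lag range.

    Returns None when the frame looks unvoiced (weak or absent periodicity).
    f_min, f_max and threshold are illustrative defaults (assumptions).
    """
    r = autocorr(frame)
    if r[0] <= 0.0:
        return None  # silent frame: no energy, no pitch
    lag_min = int(fs / f_max)                        # shortest plausible period in samples
    lag_max = min(int(fs / f_min), len(frame) - 1)   # longest plausible period in samples
    if lag_min > lag_max:
        return None  # frame too short to contain a full pitch period
    best_lag = max(range(lag_min, lag_max + 1), key=lambda k: r[k])
    if r[best_lag] / r[0] < threshold:
        return None  # peak too weak relative to zero lag: treat as unvoiced
    return fs / best_lag


if __name__ == "__main__":
    fs = 8000
    n = fs * 32 // 1000  # 32 ms frame = 256 samples
    frame = [math.sin(2 * math.pi * 200 * i / fs) for i in range(n)]
    print(estimate_f0(frame, fs))  # a 200 Hz tone has a period of 40 samples
```

Restricting the search to a pitch-lag range keeps the zero-lag peak, and very short lags near it, from being mistaken for the period.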

Preliminary Lab Development

Initial work has been done to test the proposed lab. Since the Raspberry Pi runs a Linux OS, we tested the lab by organising a voluntary workshop that required students to bring their own laptops running Linux (Ubuntu). The laptops were also loaded with:

- Octave: a high-level language for numerical computation that is quite similar to Matlab. The Octave signal package is also required.

- SoX: Sound eXchange, the Swiss Army knife of audio manipulation.

Fourth-year students were invited to attend the workshop, and 27 students registered (via Google Forms). Of these, 15 attended the workshop, which was held on 24th January 2015.

A worksheet was prepared to guide the students through the lab. It was designed to be hands-on, with students recording their own voices and manipulating the recorded audio in Octave.
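In the workshop the recordings were manipulated in Octave. For readers working outside Octave, a 16-bit mono WAV recording can be read into floating-point samples, and written back after processing, with Python's standard `wave` module. This is a sketch assuming 16-bit PCM mono files; the function names and the test tone are hypothetical.

```python
import array
import math
import os
import sys
import tempfile
import wave


def read_wav_mono16(path):
    """Read a 16-bit PCM mono WAV file; return (sampling rate, samples scaled to [-1, 1))."""
    with wave.open(path, "rb") as wf:
        if wf.getnchannels() != 1 or wf.getsampwidth() != 2:
            raise ValueError("expected a 16-bit mono WAV file")
        fs = wf.getframerate()
        raw = wf.readframes(wf.getnframes())
    pcm = array.array("h")
    pcm.frombytes(raw)
    if sys.byteorder == "big":
        pcm.byteswap()  # WAV sample data is little-endian
    return fs, [s / 32768.0 for s in pcm]


def write_wav_mono16(path, fs, samples):
    """Write samples in [-1, 1] to a 16-bit PCM mono WAV file, clipping out-of-range values."""
    pcm = array.array("h", (max(-32768, min(32767, round(s * 32767))) for s in samples))
    if sys.byteorder == "big":
        pcm.byteswap()
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(fs)
        wf.writeframes(pcm.tobytes())


if __name__ == "__main__":
    # Round trip through a temporary file: 0.1 s of a 440 Hz tone at 8 kHz (illustrative)
    fs = 8000
    tone = [0.5 * math.sin(2 * math.pi * 440 * n / fs) for n in range(fs // 10)]
    path = os.path.join(tempfile.mkdtemp(), "tone.wav")
    write_wav_mono16(path, fs, tone)
    fs_read, samples = read_wav_mono16(path)
    print(fs_read, len(samples))
```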

After the workshop, an anonymous online survey [1] was conducted using SurveyMonkey to gather feedback and help improve future workshops. The results of the survey were used to fine-tune the Raspberry Pi labs.

Raspberry Pi Based Laboratory

Here we give a general description of the Raspberry Pi-based laboratory aimed at estimating the fundamental frequency of a recorded speech segment.

Equipment

- Raspberry Pi Model B+

- USB Microphone

- HDMI Monitor

- Raspberry Pi Compatible Keyboard and Mouse

Set-up

To run the lab, the following software must be installed (this requires an internet connection, which can be provided by a WiFi dongle):

- Octave: Type sudo apt-get install octave

- Octave signal package: Type sudo apt-get install octave-signal

- SoX: Type sudo apt-get install sox
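With SoX installed, its `rec` command can capture a short utterance from the USB microphone. The sketch below builds and runs a `rec` command from Python. The output file name, the 3 s duration and the 16 kHz, 16-bit mono format are illustrative assumptions, and the audio device configuration on the Pi may need adjusting.

```python
import shutil
import subprocess


def rec_command(path, seconds=3, rate=16000, bits=16):
    """Build a SoX `rec` command for a mono recording of `seconds` seconds.

    -c 1: one channel, -r: sampling rate in Hz, -b: bits per sample;
    the trailing `trim 0 <seconds>` effect stops the recording after that duration.
    """
    return ["rec", "-c", "1", "-r", str(rate), "-b", str(bits), path, "trim", "0", str(seconds)]


def record(path, seconds=3):
    """Record from the default input device (e.g. the USB microphone) using SoX."""
    if shutil.which("rec") is None:
        raise RuntimeError("SoX `rec` not found; install it with: sudo apt-get install sox")
    subprocess.run(rec_command(path, seconds), check=True)


if __name__ == "__main__":
    record("utterance.wav")  # hypothetical output file name
```

Keeping the command construction separate from the call to `subprocess.run` makes it easy to print or check the exact command before recording.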

Laboratory Exercises

There will be three laboratory exercises:

1. Introduction to Octave

In this lab, students will be introduced to the Octave programming language. The laboratory manual can be downloaded here: File:DSP lab1.pdf

2. Speech in the time and frequency domains

This is an introduction to speech processing in the time and frequency domains. The laboratory manual can be downloaded here: File:DSP lab2.pdf
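Moving from the time domain to the frequency domain usually means windowing a short frame and taking its discrete Fourier transform (DFT); repeating this for successive frames gives a spectrogram. The sketch below uses a Hamming window and a direct O(N²) DFT so that it runs without extra packages, whereas in practice an FFT routine would be used. The frame length and the test tone are illustrative assumptions.

```python
import cmath
import math


def hamming(n):
    """Hamming window w[i] = 0.54 - 0.46 cos(2*pi*i / (n - 1))."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def magnitude_spectrum(frame):
    """|X[k]| for k = 0 .. N/2 of the Hamming-windowed frame, via a direct DFT."""
    n = len(frame)
    xw = [x * w for x, w in zip(frame, hamming(n))]
    return [
        abs(sum(xw[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n)))
        for k in range(n // 2 + 1)
    ]


def bin_to_hz(k, fs, n):
    """Frequency in Hz of DFT bin k for an n-point DFT at sampling rate fs."""
    return k * fs / n


if __name__ == "__main__":
    fs = 8000
    n = fs * 32 // 1000  # 32 ms frame = 256 samples
    frame = [math.sin(2 * math.pi * 1000 * i / fs) for i in range(n)]
    mag = magnitude_spectrum(frame)
    peak = max(range(len(mag)), key=lambda k: mag[k])
    print(bin_to_hz(peak, fs, n))  # the 1000 Hz tone falls exactly on bin 32
```

With a 256-point frame at 8 kHz the bin spacing is 31.25 Hz. This is the frequency resolution traded off against the 32 ms time resolution.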

3. Estimation of Fundamental Frequency

In this lab, students will be introduced to extracting parameters from a speech signal. The laboratory manual can be downloaded here: File:DSP lab3.pdf
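Parameter extraction is normally done frame by frame. The recording is split into short, overlapping frames (here 32 ms, matching the quasi-stationarity assumption in the Background section), and parameters are computed for each frame. The sketch below computes two simple, commonly used parameters: short-time energy and zero-crossing rate. Voiced frames tend to have higher energy and a lower zero-crossing rate than unvoiced ones, so these are often used alongside an F0 estimate. The 50% overlap and the function names are illustrative assumptions rather than details of the lab manual.

```python
import math


def frames(x, frame_len, hop):
    """Split x into frames of frame_len samples starting every hop samples (incomplete tail dropped)."""
    return [x[start:start + frame_len] for start in range(0, len(x) - frame_len + 1, hop)]


def short_time_energy(frame):
    """Mean squared amplitude of one frame."""
    return sum(s * s for s in frame) / len(frame)


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


if __name__ == "__main__":
    fs = 8000
    frame_len = fs * 32 // 1000  # 32 ms = 256 samples
    hop = frame_len // 2         # 50% overlap (assumption)
    # Illustrative input: 0.25 s of a 150 Hz tone (low zero-crossing rate, like voiced speech)
    x = [math.sin(2 * math.pi * 150 * n / fs) for n in range(fs // 4)]
    for i, f in enumerate(frames(x, frame_len, hop)):
        print(i, round(short_time_energy(f), 3), round(zero_crossing_rate(f), 3))
```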